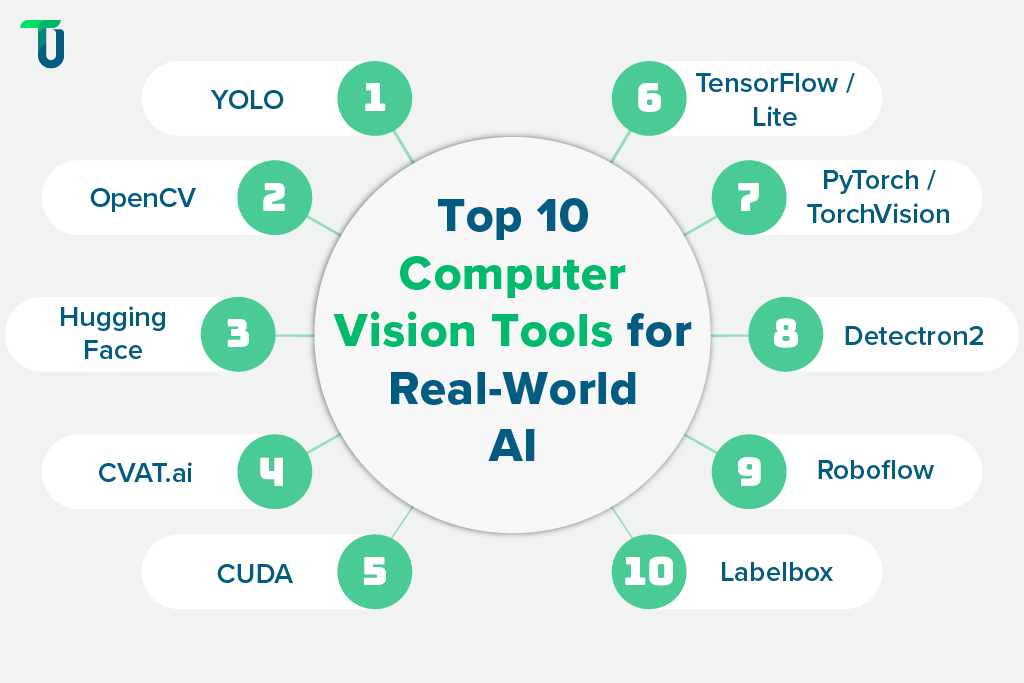

Top 10 Computer Vision Tools and Platforms in 2025

Choosing the right computer vision tools can make or break your AI vision system. Here’s a curated list of the top platforms dominating real-world deployments in 2025.

1. YOLO (You Only Look Once)

YOLO is a family of real-time object detection models. It processes the entire image in a single forward pass instead of scanning it region by region. This makes it very fast while still being accurate enough for most use cases.
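Single-pass detectors tend to emit several overlapping candidate boxes for the same object, and many YOLO variants prune them with non-maximum suppression (NMS). The sketch below illustrates that post-processing step in plain Python. The box coordinates, scores, and the 0.5 overlap threshold are made-up values for illustration, not settings from any specific YOLO release.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap heavily with one already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Toy candidates: two near-duplicate boxes on one object, plus one separate box.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
scores = [0.90, 0.75, 0.80]
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]
```

The lower-scoring duplicate (index 1) is dropped because it overlaps the best box almost entirely, while the separate detection survives.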

Why does it matter for computer vision systems?

Speed is a game-changer in many AI vision solutions. Whether it’s a surveillance feed or an industrial inspection system, decisions often need to happen in milliseconds. YOLO lets you deploy real-time inference without a heavy processing load.

Best for:

- Real-time object detection

- Smart city systems

- Traffic and crowd analytics

- Fast-response CV deployments

At TenUp, we used YOLO-based vision models to detect players, cards, and game elements in real-time for a live-streamed fantasy league platform. This enabled fast, accurate object recognition as the foundation for real-time insights and enhanced user engagement.

2. OpenCV

OpenCV is the open-source backbone of the computer vision world. It includes over 2,500 optimized algorithms for real-time image processing and analysis.

Why does this computer vision technology matter?

While deep learning models get the spotlight, traditional image processing still handles a large share of real-world computer vision (CV) tasks. OpenCV remains hard to replace for pre-processing, post-processing, and classical image operations, such as realistic car shadow generation in automotive image workflows.

Best for:

- Foundational CV workflows

- Camera feed adjustments

- Image enhancement and transformations

- Integrating with hardware (e.g., CCTV, sensors)

In our background removal solution for a visual content platform, we used OpenCV alongside BiRefNet to handle precise image refinements, like transparent window edges and drop shadows, at 4K resolution.
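Refinements such as semi-transparent window edges rest on alpha compositing: each output pixel is a weighted blend of foreground and new background. The following is a minimal pure-Python sketch of that formula, not the project's actual code. The pixel values and the `composite` helper are hypothetical, and a production pipeline would run the same arithmetic vectorized over image arrays.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def composite(fg: List[List[Pixel]], alpha: List[List[float]],
              bg: List[List[Pixel]]) -> List[List[Pixel]]:
    """Blend fg over bg per pixel: out = alpha * fg + (1 - alpha) * bg."""
    out = []
    for fg_row, a_row, bg_row in zip(fg, alpha, bg):
        row = []
        for f, a, b in zip(fg_row, a_row, bg_row):
            row.append(tuple(round(a * fc + (1.0 - a) * bc)
                             for fc, bc in zip(f, b)))
        out.append(row)
    return out


# A 1x3 strip: opaque car body, half-transparent window edge, pure background.
foreground = [[(200, 200, 200), (200, 200, 200), (200, 200, 200)]]
matte = [[1.0, 0.5, 0.0]]  # alpha matte produced by a segmentation model
background = [[(100, 150, 200), (100, 150, 200), (100, 150, 200)]]

result = composite(foreground, matte, background)
print(result)  # [[(200, 200, 200), (150, 175, 200), (100, 150, 200)]]
```

The middle pixel shows why a soft matte matters: a hard 0/1 mask would force it to be either fully car or fully background, producing a visible halo at the edge.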

3. Hugging Face Transformers for Vision

This framework brings transformer-based models to visual tasks. You can build vision-language models (VLMs) that understand both images and text.

Why does it matter for AI vision systems?

Enterprise use cases are becoming more multimodal. Think automated insurance claims from photos plus incident descriptions. Think product search, where users upload a picture and type a query. This tool lets you do that with ease.
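Visual search of this kind usually comes down to comparing embeddings: vision-language models such as CLIP map images and text into a shared vector space, and the closest catalog items win. The sketch below shows only the ranking step, using cosine similarity. The three-dimensional vectors and product names are invented stand-ins; a real model would produce the embeddings and they would have hundreds of dimensions.

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank(query: List[float],
         catalog: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Return catalog items sorted from most to least similar to the query."""
    scored = [(name, cosine(query, emb)) for name, emb in catalog.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical embeddings for three catalog items.
catalog = {
    "red sneaker": [0.9, 0.1, 0.0],
    "blue sandal": [0.1, 0.9, 0.1],
    "leather boot": [0.3, 0.2, 0.9],
}
# Stand-in for the encoded photo plus typed query.
query_embedding = [0.8, 0.2, 0.1]

results = rank(query_embedding, catalog)
for name, score in results:
    print(f"{name}: {score:.2f}")
```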

Best for:

- Image captioning

- Visual search

- Multimodal AI experiences

- Visual Q&A bots

In our image background removal project, we used Hugging Face to streamline access to pre-trained vision models and support reproducible model evaluation. It enabled faster experimentation with open-source architectures like BiRefNet while keeping the pipeline modular and scalable.

4. CVAT.ai (Computer Vision Annotation Tool)

CVAT is a robust data annotation platform. It lets you create high-quality datasets for training AI vision models with bounding boxes, segmentation masks, keypoints, and more.

Why does it matter for computer vision solutions?

Most companies underestimate the power of clean, labeled data. Understanding the right data annotation methods is essential for building production-ready AI models: if your annotations are sloppy, your model will never reach production-level accuracy. CVAT makes annotation collaborative, scalable, and auditable.
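Part of keeping annotations auditable is running automated sanity checks on exported labels before training. The sketch below assumes bounding boxes stored as `[x, y, width, height]` in pixels, the layout used by COCO-style exports. The record fields and the `find_box_problems` helper are hypothetical and are not part of CVAT itself.

```python
from typing import Dict, List


def find_box_problems(ann: Dict, img_w: int, img_h: int) -> List[str]:
    """Return human-readable problems found in one bounding-box record."""
    problems = []
    x, y, w, h = ann["bbox"]  # assumed [x, y, width, height] in pixels
    if w <= 0 or h <= 0:
        problems.append("non-positive size")
    if x < 0 or y < 0 or x + w > img_w or y + h > img_h:
        problems.append("outside image bounds")
    if not ann.get("label"):
        problems.append("missing label")
    return problems


# Toy records for a single 4K frame (3840 x 2160).
annotations = [
    {"id": 1, "label": "car", "bbox": [100, 200, 1500, 800]},
    {"id": 2, "label": "", "bbox": [3000, 1800, 1000, 300]},
    {"id": 3, "label": "wheel", "bbox": [50, 50, 0, 40]},
]

report = {a["id"]: find_box_problems(a, 3840, 2160) for a in annotations}
for ann_id, problems in report.items():
    print(ann_id, problems or "ok")
```

Checks like these catch mechanical errors cheaply, so human reviewers can spend their time on the harder question of whether a label is semantically right.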

Best for:

- Data annotation at scale

- Preparing datasets for object detection or segmentation

- Building custom CV pipelines from scratch

At TenUp, we used CVAT.ai to annotate 20,000 4K-resolution images across 37 car compositions in our automobile image background removal and replacement project. This helped us complete the image annotation task in under two weeks with a team of 10 annotators. Automated sorting in logistics depends on well-labeled training data in the same way, as discussed in “How To Automate Sorting & Cut Errors by 40%”.

5. CUDA by NVIDIA

CUDA enables parallel processing on NVIDIA GPUs. It’s the technology that makes it practical to run large deep learning models or process hundreds of frames per second.

Why does it matter for AI vision systems?

Without CUDA, most real-time CV applications would be stuck in slow motion. Whether you are training or deploying, CUDA boosts speed and responsiveness.

Best for:

- GPU acceleration

- Edge AI and real-time processing

- High-performance inference pipelines

In our background removal project for ultra-high-resolution automotive images, we used five A100 GPUs with CUDA 12.6 to train deep learning models at scale. This setup enabled fast, distributed training across 20,000 4K images—delivering real-time performance without compromising accuracy.

6. TensorFlow and TensorFlow Lite

TensorFlow is Google’s deep learning framework. TensorFlow Lite is its lightweight sibling designed for mobile and edge deployments.

Why does it matter for computer vision tools?

You get end-to-end flexibility. Train a model in TensorFlow. Optimize and deploy it with TensorFlow Lite. This reduces dev time and makes scaling easier.

Best for:

- Deep learning model development

- CV applications across mobile and embedded systems

- Scalable deployments with minimal refactoring

7. PyTorch and TorchVision

PyTorch is a developer-friendly ML framework. TorchVision provides pre-built models and tools tailored for computer vision use cases.

Why does it matter for computer vision systems?

It’s flexible, easy to debug, and ideal for R&D teams building and testing custom CV models. The ecosystem is strong and developer-first.

Best for:

- Experimental CV models

- Prototyping with custom datasets

- Quick iterations before production

8. Detectron2

Developed by Meta AI, Detectron2 is a top-tier tool for tasks like instance segmentation, keypoint detection, and object detection.

Why does this computer vision technology matter?

When pixel-level accuracy is non-negotiable, Detectron2 delivers. It is widely used in both academic research and high-risk industry applications.
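Pixel-level accuracy for segmentation is commonly measured with mask intersection over union (IoU): the share of pixels that the predicted and ground-truth masks agree on, out of all pixels either one marks as object. Below is a small pure-Python sketch of that metric on toy 4x4 masks. It is independent of Detectron2's own evaluation code, and the masks are invented for illustration.

```python
from typing import List

Mask = List[List[int]]  # 1 = object pixel, 0 = background


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two binary masks of the same shape."""
    inter = union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            inter += p & t
            union += p | t
    # Two empty masks agree perfectly by convention.
    return inter / union if union else 1.0


truth = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
# The prediction spills one column to the right of the true object.
pred = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

score = mask_iou(pred, truth)
print(round(score, 3))  # 0.667
```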

Best for:

- Instance segmentation

- Advanced object detection

- Precision-driven vision use cases

9. Roboflow

Roboflow offers a unified platform to manage the entire computer vision lifecycle—from dataset creation to model training and deployment.

Why does it matter for computer vision teams?

It reduces friction between stages. You can upload raw images, annotate, train models, and deploy APIs from a single dashboard. It’s ideal for teams that want to move fast.

Best for:

- MVPs and rapid prototyping

- Streamlined training workflows

- Teams with limited in-house CV expertise

10. Labelbox

Labelbox is a data labeling and dataset management tool built for scale. It comes with analytics, QA workflows, and flexible labeling options.

Why does it matter for computer vision solutions?

It ensures that your data is not just labeled but audited, versioned, and analyzed. This is critical for high-stakes applications in insurance, medical imaging, and autonomous vehicles.

Best for:

- Enterprise-grade dataset labeling

- High-volume, regulated CV projects

- Human-in-the-loop AI systems

Vision That Works Beyond the Demo

Let TenUp help you build computer vision systems that scale, adapt, and deliver measurable value.

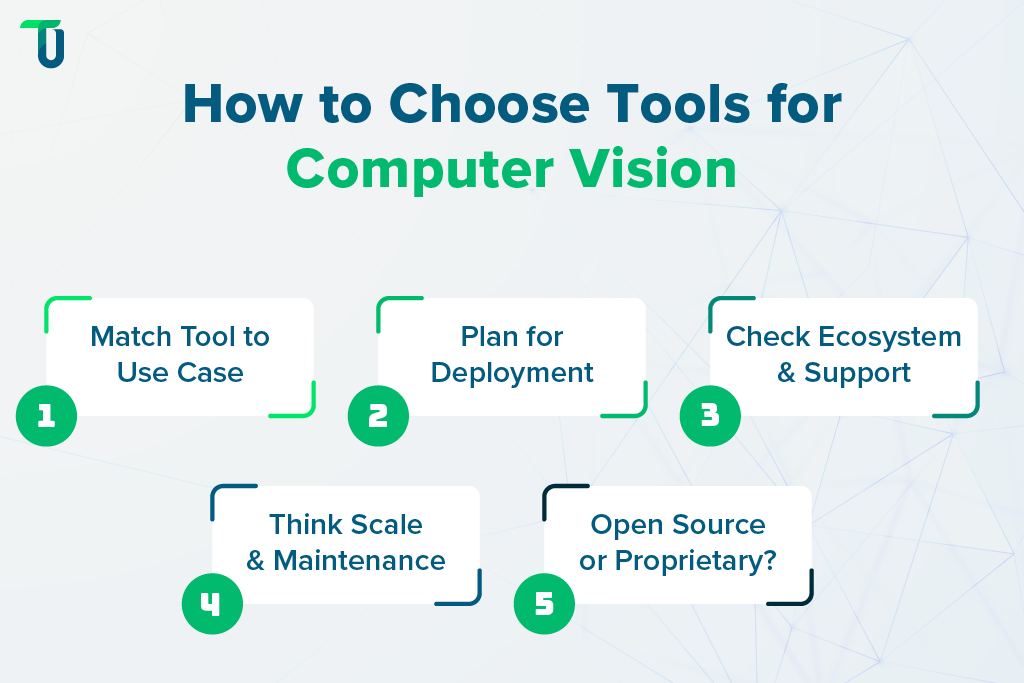

How to Choose the Right Computer Vision Tool

There’s no one-size-fits-all when it comes to selecting tools for computer vision. What works for a real-time surveillance system may fall short in a healthcare imaging solution. The right pick depends on your use case, your infrastructure, and how fast you need to go from prototype to production. Here’s what to evaluate before you invest time or budget:

1. Start with Your Use Case

Are you detecting objects, classifying images, or segmenting defects down to the pixel? Your goal determines the type of model and tool you need. YOLO is great for speed. Detectron2 gives you surgical precision, and image segmentation tools more broadly can help unlock measurable ROI across industries. Don’t choose based on popularity. Choose based on problem fit.

2. Factor in Deployment Needs

Will your computer vision system run in the cloud, on the edge, or offline on mobile? TensorFlow Lite and PyTorch Mobile are better suited for edge deployments. CUDA gives you the horsepower to run real-time inference in low-latency environments.

3. Look at Ecosystem and Support

Some platforms come with rich documentation and pre-trained models. Others rely heavily on community support. If your in-house team is small, consider tools like Roboflow or Hugging Face that offer end-to-end pipelines and strong dev support.

4. Evaluate Scalability and Maintainability

Will the model evolve with new data? Can you retrain and redeploy easily? OpenCV might get you started fast, but tools like TensorFlow and PyTorch offer better long-term scalability for enterprise-grade computer vision solutions.

5. Weigh Open Source vs Proprietary

Open-source tools offer flexibility and lower cost, but they require strong technical skills. Proprietary platforms like Labelbox bring analytics, quality assurance, and faster deployment, but come with licensing costs. Choose based on your team’s maturity and project risk.

Still not sure what to pick? Choosing tools is just one part of the journey. For a deeper dive into building scalable computer vision solutions, from planning and datasets to architecture and deployment, read our Complete Guide to Computer Vision Solutions.

The Future of Computer Vision Tools

Computer vision isn’t just advancing. It’s transforming the way enterprises operate, and the demand for enterprise computer vision solutions is growing across industries. From static detection to dynamic, context-aware analysis, the next generation of tools is smarter, faster, and deeply integrated into business workflows. Here’s what the future looks like:

Foundation Models Will Power Custom Vision

Instead of building vision systems entirely from scratch, enterprises will increasingly rely on foundation models: large, pre-trained models like CLIP, Segment Anything Model (SAM), and Google’s Gemini. Combined with MLOps workflow automation for AI models, these can be fine-tuned, retrained, and adapted efficiently for new use cases. Fine-tuning a foundation model on a modest amount of domain-specific data enables rapid adaptation to custom tasks such as defect detection or medical imaging. This significantly reduces both development cycles and labeled data requirements.

Edge AI Will Go Mainstream

Real-time processing on devices without needing constant cloud access will be essential for industries where every millisecond matters. For instance, Edge AI-enabled drones and sensors can help detect disease or nutrient stress on crops in the field and trigger immediate interventions. Even in healthcare and industrial settings, edge devices can run fall-detection, robotic assistance, or quality inspection systems locally. This improves responsiveness and reliability even when network connectivity is poor.

No-Code and Low-Code Vision Platforms Will Take Over

Teams with limited or no expertise in AI development will be able to build and deploy computer vision workflows using drag-and-drop interfaces. No-code vision platforms such as Roboflow and Clarifai are already reducing reliance on developers by offering visual pipelines and pre-built modules, empowering business users to prototype and iterate rapidly. This will democratize vision AI across business units, enabling departments like operations, quality control, or marketing to develop their own solutions.

Vision Systems Will Get Context-Aware

Future tools won’t just detect objects. They will understand behavior, intent, and even emotions, making decisions more accurate and timely. Context-aware models combine visual input with modalities like audio, GPS, or temporal data to infer intent or emotional state. For example, vision systems will be able to detect distracted driving or stress patterns. Further, more adaptive systems with smarter interfaces will emerge for advanced detection tasks like analyzing facial micro-expressions and body posture.
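Temporal context is the simplest of these signals to illustrate. A single frame with the driver looking away means little, but a sustained streak does. The sketch below assumes a hypothetical upstream model that labels each frame as eyes-on-road or not, and raises an alert only when the off-road streak exceeds a limit. The frame labels and the two-frame limit are invented for illustration.

```python
from typing import List


def distraction_alerts(eyes_on_road: List[bool],
                       max_off_frames: int) -> List[int]:
    """Return frame indices where an off-road streak first exceeds the limit."""
    alerts: List[int] = []
    streak = 0
    for idx, on_road in enumerate(eyes_on_road):
        streak = 0 if on_road else streak + 1
        # Fire once per streak, at the first frame past the allowed length.
        if streak == max_off_frames + 1:
            alerts.append(idx)
    return alerts


# Per-frame output of a hypothetical gaze classifier.
frames = [True, False, False, True, False, False, False, False, True]
alerts = distraction_alerts(frames, max_off_frames=2)
print(alerts)  # [6]
```

The short glance at frames 1 and 2 is ignored, while the longer lapse starting at frame 4 triggers exactly one alert.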

Hybrid Deployments Will Become the Norm

Enterprises will use a mix of on-device, edge, and cloud processing to balance performance, scalability, and cost. Lightweight vision inference runs on edge or embedded hardware for speed, whereas the cloud handles model training, analytics, and fallback scenarios. Think global supply chain management or smart city deployments, where thousands of cameras and sensors must synchronize in real time and report to central systems for long-term analysis.
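One way to picture the edge-first, cloud-fallback split is confidence-based routing: accept the edge model's answer when it is confident, and send only uncertain frames to a larger cloud model. This is a sketch under assumptions. The `edge_model` and `cloud_model` callables, the stub models, and the 0.8 threshold are all hypothetical.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence)


def classify(frame: bytes,
             edge_model: Callable[[bytes], Prediction],
             cloud_model: Callable[[bytes], Prediction],
             min_confidence: float = 0.8) -> Tuple[str, float, str]:
    """Return (label, confidence, source), preferring the edge model."""
    label, conf = edge_model(frame)
    if conf >= min_confidence:
        return label, conf, "edge"
    # Edge result is too uncertain: fall back to the heavier cloud model.
    label, conf = cloud_model(frame)
    return label, conf, "cloud"


# Stub models standing in for real inference calls.
def fake_edge(frame: bytes) -> Prediction:
    return ("pallet", 0.95) if frame == b"clear" else ("pallet", 0.40)


def fake_cloud(frame: bytes) -> Prediction:
    return ("forklift", 0.91)


print(classify(b"clear", fake_edge, fake_cloud))   # ('pallet', 0.95, 'edge')
print(classify(b"blurry", fake_edge, fake_cloud))  # ('forklift', 0.91, 'cloud')
```

The threshold is the main tuning knob: raising it improves accuracy on hard frames at the cost of more cloud traffic and latency.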

Security and Compliance Will Be Built In

Evolving regulations such as HIPAA in the US and GDPR in Europe require automation-ready privacy safeguards, like on-device anonymization, encrypted overlays, and transparent audit trails. Modern vision tools now ship with built-in controls for data minimization, access management, and automated compliance reporting. Leading vendors increasingly promote “privacy by design” as a default, rather than an afterthought, in their computer vision SDKs and platforms.
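On-device anonymization can be as simple as pixelating a sensitive region before a frame leaves the camera. The sketch below shows the idea on a tiny grayscale image in plain Python. The region coordinates would normally come from a face or license-plate detector, which is assumed here rather than implemented, and `pixelate_region` is a hypothetical helper.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale intensities, 0-255


def pixelate_region(img: Image, box: Tuple[int, int, int, int],
                    block: int) -> Image:
    """Replace each block x block tile inside box with its mean intensity."""
    x1, y1, x2, y2 = box  # half-open: columns x1..x2-1, rows y1..y2-1
    out = [row[:] for row in img]  # copy so the input stays untouched
    for by in range(y1, y2, block):
        for bx in range(x1, x2, block):
            ys = range(by, min(by + block, y2))
            xs = range(bx, min(bx + block, x2))
            total = sum(img[y][x] for y in ys for x in xs)
            mean = total // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]
# Hypothetical detector output: a sensitive region in the top-left corner.
anonymized = pixelate_region(image, (0, 0, 2, 2), block=2)
print(anonymized[0], anonymized[1])  # [35, 35, 30, 40] [35, 35, 70, 80]
```

Larger block sizes discard more detail. Choosing one is a trade-off between privacy and keeping the frame useful for downstream analytics.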

Interoperability Will Drive Ecosystem-Level Insights

Enterprises are already integrating vision systems with ERP, CRM, MES, and IoT platforms to break data silos. This helps them generate insights that combine visual, transactional, and sensor data for actionable intelligence. Examples include linking visual defect detection on a factory floor to automatic reordering of parts, or correlating traffic analytics with retail footfall and sales. The Open Neural Network Exchange (ONNX) format and RESTful APIs facilitate such cross-platform interoperability.
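The defect-to-reorder link can be sketched as a small rule that turns detection events into a JSON request body for a downstream system. Everything specific here is an assumption: the event fields, the `valve-12` part ID, the payload schema, and the threshold are invented, and a real integration would follow the ERP vendor's API.

```python
import json
from typing import Dict, List, Optional


def build_reorder_request(detections: List[Dict], part_id: str,
                          max_defects: int) -> Optional[str]:
    """Return a JSON body for a reorder call, or None if none is needed."""
    defects = [d for d in detections
               if d["part_id"] == part_id and d["defect"]]
    if len(defects) <= max_defects:
        return None
    payload = {
        "part_id": part_id,
        "reason": "visual_defect_rate",
        "defect_count": len(defects),
        "quantity": len(defects),  # replace one-for-one in this sketch
    }
    return json.dumps(payload, sort_keys=True)


# Hypothetical inspection events from a vision system on the line.
events = [
    {"part_id": "valve-12", "defect": True},
    {"part_id": "valve-12", "defect": True},
    {"part_id": "valve-12", "defect": False},
    {"part_id": "valve-12", "defect": True},
    {"part_id": "hose-07", "defect": True},
]

body = build_reorder_request(events, "valve-12", max_defects=2)
print(body)
```

The resulting string would be sent as the body of a POST request to the ordering system's REST endpoint.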

The next wave of computer vision tools will not just help you see better. They will help you decide smarter. Now is the time to invest in solutions that align with your long-term business goals.

Built to Scale, Engineered to Deliver: Why Enterprises Trust TenUp for Computer Vision Solutions

Selecting the right partner matters as much as choosing the right computer vision tools. TenUp Software Services offers full-stack AI engineering services and proven technical leadership to ensure your vision projects succeed from day one:

Comprehensive AI Engineering

TenUp’s AI team specializes in end-to-end ML pipelines, from model development and deployment to managing AI-powered applications, covering both cloud and edge deployments.

Check out our case study on fish identification app development, where we deployed custom image recognition models on Android and iOS, enabling real-time fish species detection on the edge.

Proven Production Expertise

We help enterprises move beyond pilots by delivering models ready for real-world environments. Our AI services include deep learning, computer vision, NLP, and managed AI solutions.

One example: we built a GenAI-powered iPad app for a premium logistics client, automating item profiling and condition reporting with multilingual support and natural language search.

Structured DataOps & Quality

With expertise in data engineering and DevOps, TenUp ensures your computer vision systems are built on reliable, high-quality datasets and robust pipelines.

For one client, we built centralized warehouse management software across multiple portfolio companies, using AI for image-based damage detection and reliable data pipelines for real-time analytics.

Enterprise-Grade Excellence

From digital transformation to secure cloud and app lifecycle management, TenUp brings the enterprise rigor needed for scalable, compliant, and maintainable AI deployments.

We brought the same enterprise discipline to a US-based fluid systems distributor, building AI-powered Purchase Order Automation Software that integrated Zendesk, SAP, and custom AI agents for accuracy, speed, and scale.

If you’re looking to build computer vision solutions that don’t just work in demos but deliver real impact at scale, start by exploring how a custom model for parcel damage detection can unlock measurable ROI in logistics operations.

Ready to Build What Off-the-Shelf Computer Vision Tools Can’t?

TenUp helps you create custom computer vision systems that fit your business, not the other way around.

Frequently asked questions

What are the key differences between traditional computer vision tools and AI-based vision platforms?

Traditional vision tools such as OpenCV rely on manually coded rules and feature engineering, making them suitable for simple, static tasks. AI-based platforms use deep learning to automatically learn patterns from data, adapt to variability, and scale across complex use cases like object detection or segmentation.

How do no-code computer vision tools compare to custom-built solutions for enterprise use?

No-code tools enable rapid prototyping with minimal expertise—great for MVPs or simple workflows. Custom-built solutions offer greater control, precision, and scalability—essential for complex, regulated, or high-risk enterprise use cases.

Which computer vision tools are best suited for small teams or startups with limited AI expertise?

Tools like Roboflow, LandingLens, and Labelbox offer no-code interfaces, pre-trained models, and end-to-end workflows—ideal for startups building MVPs without in-house ML teams. For basic tasks, Google Cloud Vision API or OpenCV (with light coding) are also beginner-friendly and scalable.

Can I deploy computer vision tools on edge devices without losing accuracy?

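In most cases, yes. Runtimes and toolkits like TensorFlow Lite and OpenVINO, together with edge hardware like NVIDIA Jetson, enable real-time vision processing on edge devices. Model optimization techniques like pruning and quantization usually cost a little accuracy, but with careful calibration the loss is typically small enough to maintain high performance even on low-power hardware.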
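To see why quantization preserves most of a model's accuracy, it helps to look at the arithmetic. The sketch below applies a generic 8-bit affine scheme to a handful of made-up weights and measures the round-trip error. It illustrates the principle only and is not the exact scheme used by any particular runtime.

```python
from typing import List, Tuple


def quantize(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine-quantize floats to 0..255: q = round(x / scale) + zero_point."""
    lo = min(min(values), 0.0)  # the range must include zero
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255
    if scale == 0:
        scale = 1.0  # all-zero input: any scale works
    zero_point = round(-lo / scale)
    q = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map 8-bit codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


weights = [-0.5, 0.0, 0.3, 1.0]  # toy values, not from a real model
codes, scale, zero_point = quantize(weights)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(codes)  # [0, 85, 136, 255]
print(max_error <= scale / 2 + 1e-9)  # True
```

Each weight now takes one byte instead of four, and the reconstruction error is bounded by half a quantization step, which is why accuracy usually degrades only slightly.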

How do data annotation platforms impact the accuracy of computer vision models?

High-quality annotations are the foundation of accurate vision models. Platforms like CVAT and Labelbox enhance precision by offering structured workflows, version control, and QA tools—ensuring cleaner training data and more reliable real-world performance.

Are there open-source computer vision tools that can match enterprise-grade platforms?

Yes—frameworks like Detectron2, YOLOv8, and MMDetection offer state-of-the-art performance rivaling commercial platforms. With proper tuning, they match enterprise-grade results, though they may require more setup, customization, and ongoing maintenance.

How are computer vision tools being integrated with IoT and robotics in 2025?

In 2025, computer vision tools integrate seamlessly with IoT and robotics via APIs and edge AI, enabling real-time automation, smart navigation, and visual analytics in factories, healthcare, and smart cities. This fusion drives autonomy, faster decisions, and adaptive machines.

What are the top security and compliance risks in using computer vision tools for enterprise applications?

Key risks include biometric privacy, AI bias, and regulatory non-compliance (e.g., GDPR, HIPAA). Enterprise CV systems must guard against data breaches, adversarial attacks, and misuse—especially in sensitive sectors like healthcare, finance, and surveillance.