Understanding Object Detection AI in Autonomous Vehicles

What Object Detection AI Actually Does on the Road

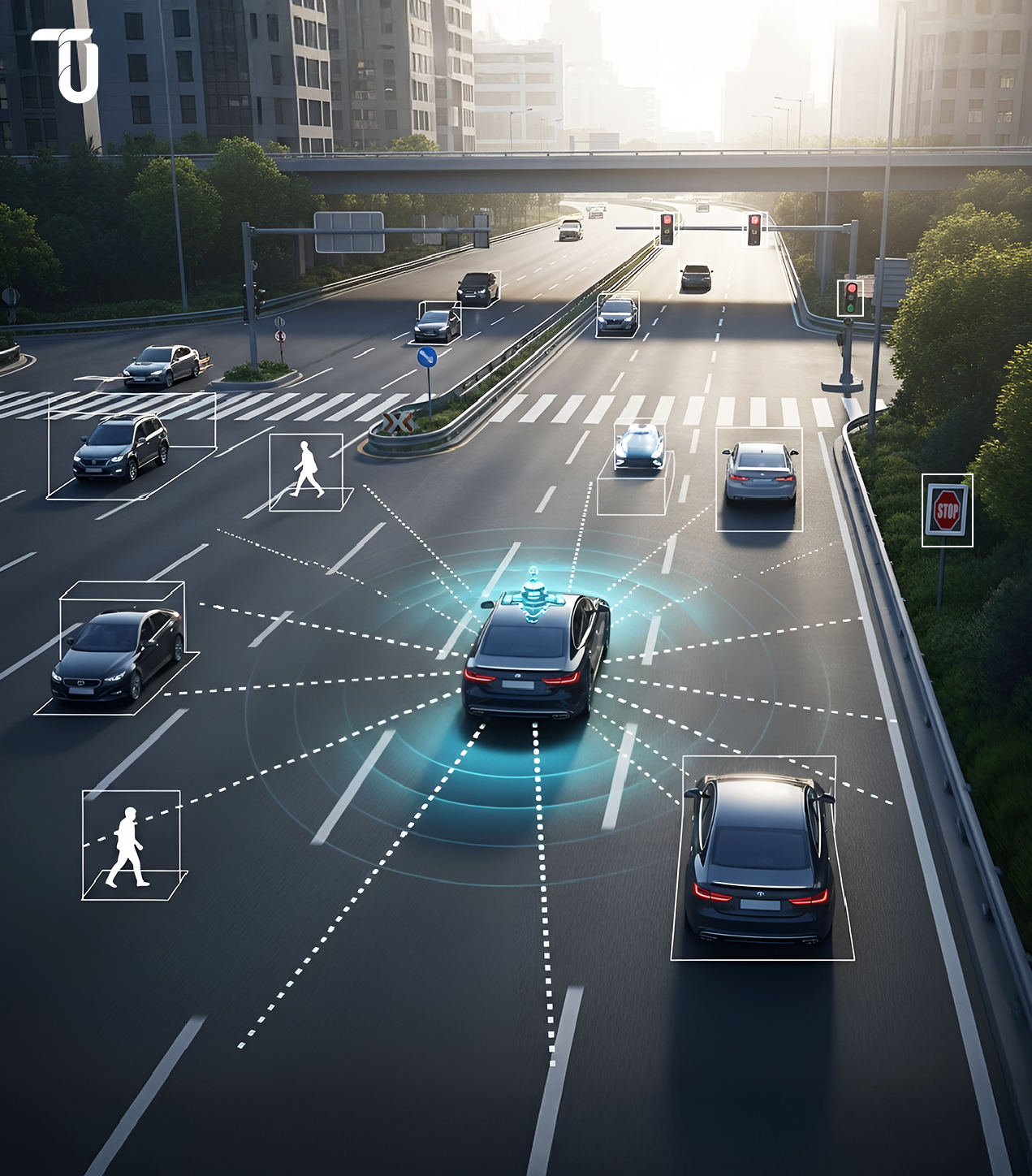

Imagine a vehicle that can see its surroundings, analyze every object in its path, and react in milliseconds. That’s the power of AI object detection in autonomous vehicles. From identifying a pedestrian about to cross the street to recognizing a traffic cone half a block away, this technology enables cars to make smarter, faster, and safer decisions.

At the heart of it is AI image detection software—systems trained to interpret visual data just like a human driver, only better. Using AI image labeling and deep learning, these models continuously learn to detect, classify, and prioritize road objects. The result? Safer navigation, fewer errors, and near real-time response to dynamic road scenarios.

Key Technologies Powering Object Detection (Lidar, Cameras, Radar, Deep Learning)

For real-time object detection, it’s not just about having cameras on a vehicle—it’s about building a vision stack that works like a high-performance brain. Most AVs rely on a fusion of sensors:

- Lidar to map the environment in 3D

- Cameras paired with AI image detection tools for visual accuracy

- Radar for range and relative-speed measurement, including in rain and fog

- AI image recognition software and AI recognition software for real-time classification

- Face detection and recognition systems (in-cabin or for driver monitoring) are also emerging to enhance passenger safety and driver attentiveness.

Deep learning models trained on millions of road scenarios enable AI image recognition to detect not just objects, but intent, like predicting if a cyclist is about to turn or a pedestrian might jaywalk.

This synergy between hardware and AI object recognition algorithms turns passive data into actionable insight. And for C-suite leaders, it’s not just about tech sophistication—it’s about building trust in autonomy, one frame at a time.
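One way to picture the fusion described above is a simple "late fusion" step that attaches radar range and speed to camera detections pointing in roughly the same direction. The sketch below is illustrative only: the class names, the `fuse` function, and the 2-degree bearing gate are assumptions made for this example, not a description of any production stack.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CameraDetection:
    label: str          # class predicted by the vision model
    confidence: float   # model score in [0, 1]
    bearing_deg: float  # angle relative to the vehicle heading


@dataclass
class RadarReturn:
    bearing_deg: float
    range_m: float
    radial_speed_mps: float  # negative means the object is approaching


@dataclass
class FusedObject:
    label: str
    confidence: float
    range_m: Optional[float]
    radial_speed_mps: Optional[float]


def fuse(camera: List[CameraDetection],
         radar: List[RadarReturn],
         max_bearing_gap_deg: float = 2.0) -> List[FusedObject]:
    """Attach the nearest-in-bearing radar return to each camera detection."""
    fused = []
    for det in camera:
        nearest = min(radar,
                      key=lambda r: abs(r.bearing_deg - det.bearing_deg),
                      default=None)
        if (nearest is not None
                and abs(nearest.bearing_deg - det.bearing_deg) <= max_bearing_gap_deg):
            fused.append(FusedObject(det.label, det.confidence,
                                     nearest.range_m, nearest.radial_speed_mps))
        else:
            # No radar support: keep the visual detection, but without range data.
            fused.append(FusedObject(det.label, det.confidence, None, None))
    return fused
```

Real systems typically fuse in a shared 3D coordinate frame with calibrated sensors and timestamps; matching on bearing alone is used here only to keep the idea visible.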

Critical Safety Challenges in Autonomous Driving

Autonomous vehicles operate in an unpredictable world—one filled with jaywalking pedestrians, unexpected debris, erratic drivers, and blind intersections. Traditional ADAS systems often rely on predefined rules and static programming. The result? A limited ability to adapt to real-world variables.

That’s where AI object detection changes the game.

Unlike legacy systems, AI image detection tools can analyze road conditions in real time, identify potential threats, and trigger immediate action. Whether it’s a misplaced traffic cone or a cyclist weaving through lanes, modern AI image recognition software and photo recognition software enable vehicles to interpret and respond faster than any human or static codebase ever could.

The lack of real-time object detection is one of the most significant gaps in legacy autonomous vehicle (AV) technology, often leading to missed hazards and delayed reaction times. The reality? Every millisecond counts on the road.
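To make "every millisecond counts" concrete, the short calculation below converts perception latency into distance traveled before the vehicle can even begin to react. The function name and the example speeds are assumptions chosen for illustration.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Distance in meters covered while the system is still processing a frame."""
    speed_mps = speed_kmh / 3.6           # km/h to m/s
    return speed_mps * (latency_ms / 1000.0)


for speed in (50, 100):
    travelled = distance_during_latency(speed, latency_ms=100)
    print(f"{speed} km/h, 100 ms latency: {travelled:.2f} m")
```

At 100 km/h, a 100 ms delay corresponds to roughly 2.8 m of travel before any braking or steering starts.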

The Role of Real-Time Detection and Response

Autonomous safety isn’t just about seeing—it’s about reacting fast. With AI recognition software and AI image detection, AVs can process high-resolution inputs from multiple sensors at lightning speed. Deep learning algorithms work in sync with AI object recognition models to flag anomalies, track object movement, and make split-second navigation decisions.

More importantly, these systems are constantly learning. Using AI image labeling, vehicles are trained on diverse road conditions—from heavy rain to low-light scenarios—so they don’t just detect objects, they understand the driving context.

Face detection and recognition systems are also becoming critical in driver monitoring, ensuring that even semi-autonomous systems can detect drowsiness, distraction, or human override signals in time.

This is the difference between a car that drives itself and a car that drives safely. For CTOs and CEOs betting on AV technology, safety is non-negotiable. And image recognition using AI is the strategic edge that bridges the gap between automation and trust.

Ready to build smarter, safer mobility solutions?

Let’s co-create your next-gen object detection system tailored for real-world conditions.

How Object Detection AI Enhances Vehicle Safety

Autonomous vehicles share the road with the most unpredictable elements—humans. Pedestrians walking between parked cars, cyclists overtaking from the blind side, children darting across crosswalks. For AVs to be truly safe, AI image detection must go beyond basic object spotting.

Modern AI image detection tools use high-definition sensors and AI object recognition models to detect human motion patterns with high precision. Whether someone is mid-step or just about to cross, AI image recognition software and photo recognition software predict and react in real time, reducing false negatives and improving pedestrian safety.

Early Hazard Identification and Collision Avoidance

Safety isn't reactive anymore. With real-time object detection, AVs are becoming proactive decision-makers. The system doesn’t wait for an object to appear in the lane—it constantly analyzes surroundings, even spotting partially visible or fast-approaching objects with AI image detection software.

Here’s where AI recognition software makes a significant impact:

- Detecting road debris before it becomes a threat

- Identifying stopped vehicles beyond line of sight

- Anticipating potential collisions and initiating avoidance maneuvers autonomously

Emerging features like face detection and recognition—especially in semi-autonomous or shared mobility vehicles—can help monitor driver attention or verify identity for enhanced security.

This level of foresight is what makes AI object detection, image recognition, and predictive modeling not just useful, but mission-critical for autonomous safety.
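Anticipating a collision is often framed in terms of time-to-collision (TTC): the gap to an object divided by the speed at which that gap is closing. The sketch below is a simplified, assumption-laden illustration; the 2-second threshold and the function names are hypothetical, and a real planner would also account for braking capability, road surface, and uncertainty in the measurements.

```python
import math


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact if nothing changes; infinite if the gap is not closing."""
    if closing_speed_mps <= 0:
        return math.inf
    return gap_m / closing_speed_mps


def should_brake(gap_m: float, closing_speed_mps: float,
                 ttc_threshold_s: float = 2.0) -> bool:
    """Flag an avoidance maneuver when TTC drops below the (assumed) threshold."""
    return time_to_collision(gap_m, closing_speed_mps) < ttc_threshold_s


# Debris 20 m ahead while closing at 15 m/s leaves about 1.33 s to react.
print(should_brake(gap_m=20.0, closing_speed_mps=15.0))
```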

Adaptive Driving in Complex Environments (Weather, Traffic, Unexpected Obstacles)

Roads are rarely perfect. Rain, fog, snow, and construction zones—each brings visibility and navigation challenges. With image recognition using AI, autonomous systems can adapt in real time. They don’t rely solely on pre-programmed maps—they learn and re-learn the environment continuously.

Thanks to AI image labeling, deep learning, and image detection algorithms, the vehicle can differentiate between a shadow and a pothole, a plastic bag and a solid object. This allows for context-aware navigation, even in unfamiliar or high-risk environments.

Advanced systems also leverage photo recognition software to enhance the vehicle's ability to classify environmental elements under poor visibility—foggy road signs, snow-covered lane markings, or obstructed signals.

In-cabin face detection and recognition capabilities are also being explored to monitor driver alertness in Level 2 or Level 3 autonomous systems—providing an additional layer of situational awareness in shared control environments.

The result? Safer driving, not just in theory, but in every turn, lane change, and stop.

For forward-looking C-suite leaders, this is where the competitive edge lies. It’s not just about putting a driverless vehicle on the road; it’s about putting a safe, intelligent, and scalable AV system out there, powered by enterprise image recognition and segmentation services and real-time image detection. If you're exploring enterprise computer vision solutions, building a robust tech stack is crucial.

Proven Benefits and Impact on Autonomous Vehicle Performance

When safety meets speed and precision, performance takes a leap forward. That’s exactly what AI object detection delivers for autonomous vehicles—not just smarter driving, but measurable, bottom-line impact.

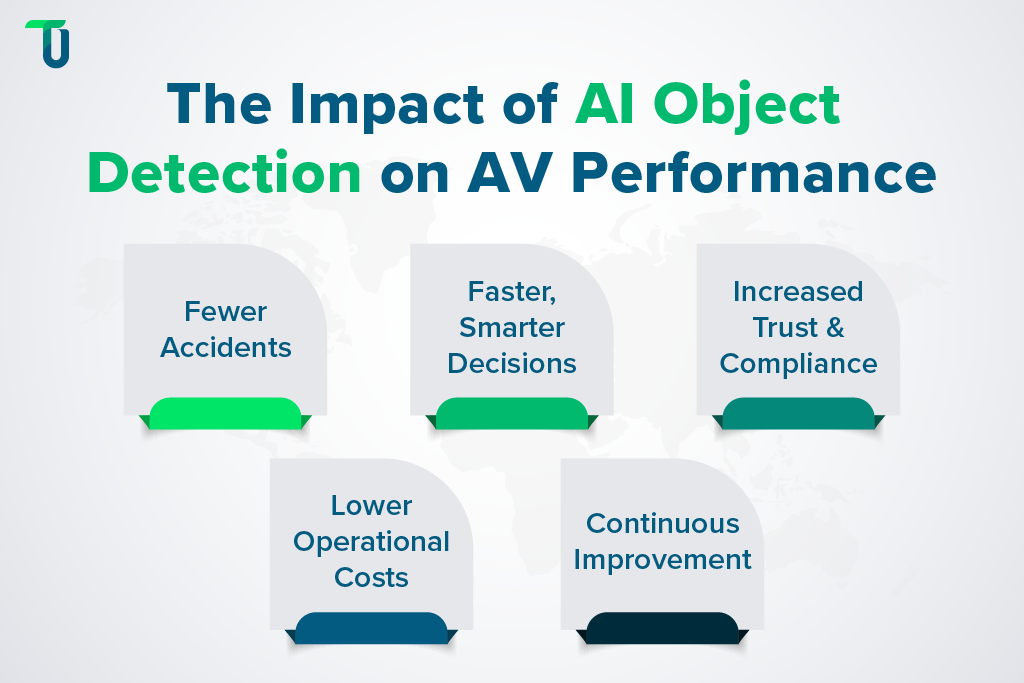

Reduction in Accident Rates and Near Misses

The biggest win? Fewer accidents.

With AI image detection tools constantly scanning for threats and reacting instantly, vehicles avoid potential collisions long before a human driver could. Real-world use cases show a significant drop in crash rates after implementing AI object recognition, photo recognition software, and real-time object detection—especially in urban and high-traffic zones.

Improved Decision-Making Speeds and Accuracy

Milliseconds matter.

AI recognition software processes visual data from multiple sources—Lidar, radar, cameras—in parallel. By using AI image detection software, image recognition using AI, and real-time classification, vehicles make complex decisions (like rerouting, braking, or lane shifting) faster and with more accuracy than traditional systems.

Enhanced Passenger Trust and Regulatory Compliance

Trust drives adoption.

For AV startups and OEMs, building public confidence is key—and nothing builds trust like consistent safety. With AI image recognition software, AI image labeling, and transparent logging, vehicles can document and justify every decision made on the road. That transparency helps meet regulatory standards, win over skeptical passengers, and accelerate market entry.
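As a rough illustration of what "transparent logging" can look like, the sketch below serializes each driving decision, together with the detections that led to it, as one JSON line. The field names and the `decision_record` helper are assumptions for this example; actual logging requirements depend on the applicable regulations and the vehicle platform.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Detection:
    label: str
    confidence: float
    range_m: float


def decision_record(frame_id: int, timestamp_s: float,
                    detections: List[Detection], action: str) -> str:
    """Return a single-line JSON record linking an action to its inputs."""
    record = {
        "frame_id": frame_id,
        "timestamp_s": timestamp_s,
        "detections": [asdict(d) for d in detections],
        "action": action,
    }
    # sort_keys keeps the output stable, which makes logs easier to diff and audit.
    return json.dumps(record, sort_keys=True)


print(decision_record(42, 12.5, [Detection("pedestrian", 0.91, 18.0)], "brake"))
```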

In addition, face detection and recognition technology—particularly in shared or semi-autonomous vehicles—adds another layer of security and personalization that builds user trust.

Lower Operational Costs Through Smarter Navigation

Fewer errors mean fewer expenses.

With AI object detection, AVs avoid unnecessary braking, idling, and detours. This improves route efficiency, reduces wear and tear, and cuts energy consumption. Over time, AI image detection helps lower the total cost of ownership, especially at scale. To see how this translates into tangible business value, explore proven ROI from logistics image segmentation.

Better Data Feedback Loops for Continuous Improvement

AVs don’t just drive—they learn.

AI image recognition systems collect thousands of data points every mile. This constant feedback loop, powered by AI image labeling, image detection, and machine learning, enables manufacturers to refine their performance, address blind spots, and continually improve software accuracy across their fleets.
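One common way to drive such a feedback loop is to send the frames the model was least sure about back for human labeling. The sketch below assumes a hypothetical frame format (a dict with `frame_id` and a list of detection `confidences`) and an arbitrary "uncertain" band of 0.3 to 0.6; both are illustrative choices rather than recommended values.

```python
from typing import Dict, List


def select_for_relabeling(frames: List[Dict], low: float = 0.3,
                          high: float = 0.6, budget: int = 100) -> List[str]:
    """Pick the frames with the most uncertain detections, up to a labeling budget."""
    scored = []
    for frame in frames:
        uncertain = [c for c in frame["confidences"] if low <= c <= high]
        if uncertain:
            scored.append((len(uncertain), frame["frame_id"]))
    # Most uncertain frames first; ties broken by frame id for a stable order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [frame_id for _, frame_id in scored[:budget]]


frames = [
    {"frame_id": "a", "confidences": [0.95, 0.90]},
    {"frame_id": "b", "confidences": [0.45, 0.50, 0.99]},
    {"frame_id": "c", "confidences": [0.35]},
]
print(select_for_relabeling(frames))
```

In this example, frame `a` is skipped because every detection is confident, while `b` and `c` are queued for review.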

Integrating Object Detection AI into Your Autonomous Vehicle Systems

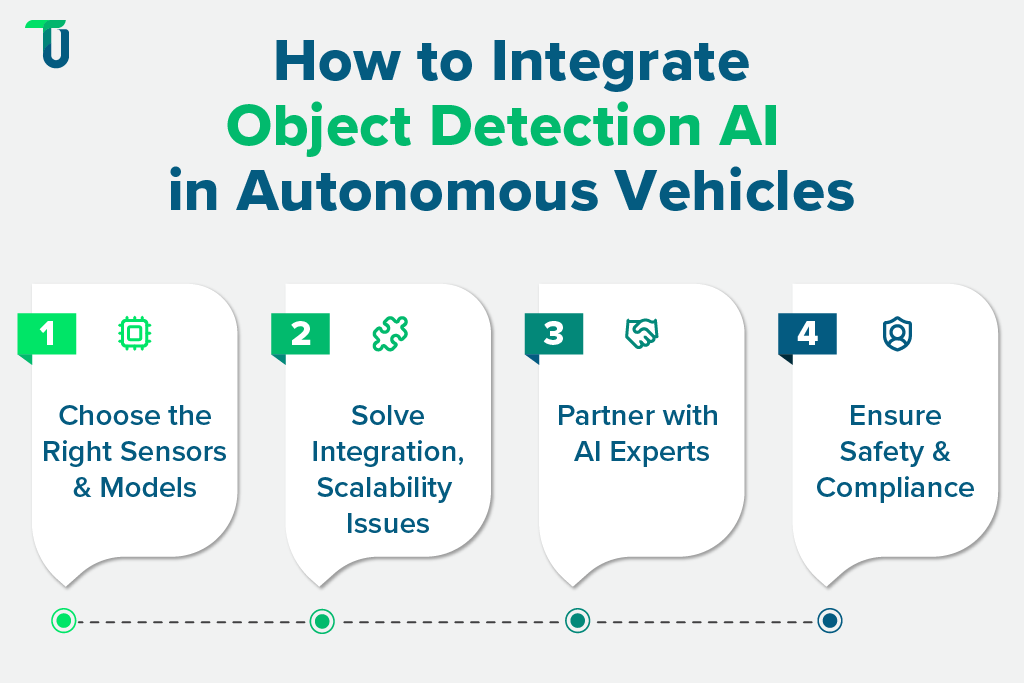

Building safer, smarter autonomous vehicles starts with asking the right questions—what to build, how to scale, and who to partner with. Integrating AI object detection isn’t plug-and-play; it’s a strategic move that defines long-term AV success.

Choosing the Right AI Models and Sensors for Your Fleet

Every vehicle is different. So are the environments they navigate.

For autonomous vehicles (AVs) operating in dense urban areas, the right combination of AI image detection tools, thermal cameras, and LiDAR sensors is essential. On highways, radar and AI image recognition software might be prioritized for long-range detection.

TenUp Software can bring deep expertise in sensor fusion and AI object recognition frameworks to help enterprises build robust detection models tailored to terrain, climate, and regional driving behavior.

Overcoming Integration and Scalability Challenges

Prototype to production is a steep climb.

The biggest hurdle? Getting AI image detection software to work seamlessly with your vehicle’s existing perception stack and control systems. Real-time object detection must be low-latency, power-efficient, and compatible with your compute infrastructure—whether that’s edge-based or cloud-augmented.
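A low-latency requirement is usually checked against tail latency rather than the average, since occasional slow frames are the ones that matter for safety. The sketch below computes a nearest-rank percentile over recorded per-frame latencies and compares it to a budget. The function names, the 99th percentile, and the example budget are assumptions for illustration.

```python
from typing import List


def percentile_latency(latencies_ms: List[float], percentile: int = 99) -> float:
    """Nearest-rank percentile of per-frame latencies (percentile is an integer 1..100)."""
    if not latencies_ms:
        raise ValueError("no latency samples recorded")
    ordered = sorted(latencies_ms)
    # Integer ceiling of percentile * n / 100, avoiding floating-point rounding.
    rank = (percentile * len(ordered) + 99) // 100
    return ordered[rank - 1]


def meets_budget(latencies_ms: List[float], budget_ms: float,
                 percentile: int = 99) -> bool:
    """True if the chosen tail percentile stays within the latency budget."""
    return percentile_latency(latencies_ms, percentile) <= budget_ms


samples = [18.0, 21.5, 19.2, 47.0, 20.1]
print(meets_budget(samples, budget_ms=33.0))
```

With only five samples the 99th percentile is simply the slowest frame, so the single 47 ms outlier fails a 33 ms budget even though the average looks healthy.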

TenUp’s product engineering and cloud integration teams specialize in building scalable vision pipelines using AI image labeling and retraining loops, ensuring your system learns and adapts with every mile on the road.

Partnering with Technology Providers and AI Experts

Don’t build everything in-house.

The fastest-growing AV players are co-creating with AI recognition software vendors, computer vision labs, and cloud AI service providers. TenUp Software serves as a strategic partner, bringing cross-domain expertise in enterprise computer vision solutions, computer vision, embedded systems, and DevOps.

Whether you're building an MVP or scaling globally, TenUp can help accelerate model deployment, sensor calibration, and backend orchestration with production-grade reliability.

Ensuring Safety + Compliance from the Ground Up

Integration isn’t just technical—it’s regulatory.

Deploying AI image detection and recognition requires consideration of privacy laws, regional traffic policies, and safety frameworks such as the ISO 26262 functional safety standard and the vehicle regulations developed under UNECE WP.29.

With a strong track record in regulated domains like healthcare and fintech, TenUp Software brings that same compliance-first mindset to AV safety—ensuring your AI image detection tools are built with transparency, auditability, and real-world readiness.

Real-World Applications of Object Detection AI in AV Safety

It’s one thing to build a smart detection model in the lab. It’s another to see it navigate a rainy highway, detect a jaywalking pedestrian, or slow down for a cyclist swerving into a lane. That’s where AI object detection proves its real-world value—in chaotic, unpredictable, human environments.

Let’s explore how different applications of AI image detection tools are solving real problems on the road.

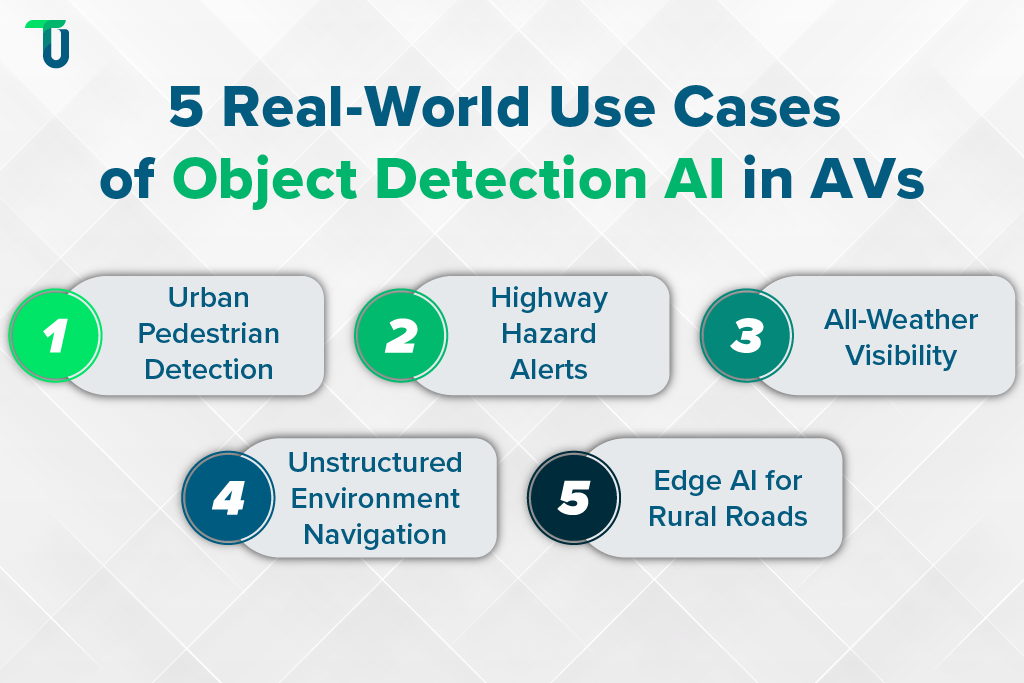

1. Detecting Vulnerable Road Users in Dense Urban Zones

In cities, autonomous vehicles must make split-second decisions around pedestrians, cyclists, e-scooters, and animals.

Using AI image detection software, AVs now classify these moving objects, even if partially occluded or in poor lighting. By combining AI image recognition software with depth estimation and motion tracking, vehicles can anticipate movement patterns and avoid accidents before they happen.

2. Dynamic Hazard Detection on Highways

From sudden tire debris to stopped vehicles in blind spots, highways present a different challenge: speed and surprise.

Real-time object detection systems powered by AI object recognition track lane changes, roadside obstacles, and erratic vehicle behavior. Combined with long-range sensors, these AI models enable AVs to execute evasive maneuvers without requiring human input.

In one of our projects, we built an AI-driven fish identification system. This Vision AI solution could run at the edge and deliver real-time insights, even in low-bandwidth, remote environments. It helped automate species recognition and trigger regulatory protocols instantly. Through this project, we realized how powerful edge-deployable models can be when designed for speed, precision, and adaptability.

3. Handling Adverse Weather and Low Visibility

Rain, snow, fog—they all degrade sensor performance.

But when AVs pair AI image labeling with thermal cameras and radar, visibility improves drastically. Object detection systems now identify lane markers through snow, recognize stalled vehicles in rain, and detect heat signatures of pedestrians through fog.

With multi-modal data fusion experience, TenUp engineers can help AV companies develop weather-resilient AI image detection tools that maintain accuracy when it matters most.

4. Interpreting Unstructured Environments Like Construction Zones

Standard lane-following won’t cut it when the road disappears.

In construction zones or off-road conditions, image recognition using AI enables autonomous vehicles (AVs) to spot cones, barriers, temporary signs, and even hand gestures from workers. These signals aren’t always standardized, so detection models must be trained with highly localized, labeled datasets.

TenUp’s AI teams specialize in training models on non-standard and multilingual signage. This can help make AV systems more adaptable across geographies and contexts.

5. Edge-First Object Detection for Remote and Rural Driving

Not all AVs operate in 5G-covered cities.

In rural areas, edge-optimized AI image detection software enables real-time decisions without cloud dependency. Detecting livestock, dirt road edges, or even fallen trees can save lives and prevent breakdowns in remote areas.

From urban mobility to cross-country logistics, every mile driven becomes smarter, safer, and more situationally aware. If you’re building the next generation of AV systems, this is your signal: object detection AI isn’t optional—it’s essential, and can be accelerated through custom computer vision software development.

From Detection to Direction: Why Now’s the Time to Build Safer Roads with TenUp

Autonomous vehicles are no longer just about getting from Point A to Point B. They’re about predicting the pedestrian about to jaywalk. From AI image labeling to real-time object detection, safety in AVs depends on how fast, how accurately, and how intelligently your systems can “see” the world. And that’s precisely where TenUp Software can help.

At TenUp Software Services, we don’t just build AI solutions—we engineer intelligence that scales.

We can help companies:

- Train detection models with precision using real-world, edge-case-rich datasets

- Build custom AI image detection tools that adapt to weather, traffic, and geography

- Deploy lightweight models at the edge for low-latency decision making

- Integrate AI seamlessly into your existing stack

Our AI engineering teams specialize in end-to-end solutions—from model training and labeling to deployment and post-launch optimization. Whether you’re building urban robotaxis or autonomous freight fleets, we can help you get there faster, with fewer false positives and more confident decisions.

Safer roads don’t just happen. They’re engineered.

Let’s build the future of autonomous safety together—starting with smarter vision.

Ready to Make Autonomous Driving Safer, Smarter, and Scalable?

Partner with TenUp Software to build AI-powered object detection systems that see more, respond faster, and reduce risk on every mile driven.

Frequently asked questions

How accurate is AI object detection in real-world driving conditions?

AI object detection can reach 95%+ accuracy in ideal conditions, but real-world accuracy varies with lighting, weather, and occlusion. Combining deep learning with sensors like LiDAR and radar improves reliability in complex scenarios.

Can AI object detection reliably identify small or partially occluded objects?

Yes—modern AI models like YOLO and Faster R-CNN can detect small or occluded objects, especially when trained with attention mechanisms and context-aware data. Still, accuracy drops with severe occlusion or cluttered backgrounds, making high-quality training data and sensor fusion essential.

How is object detection evaluated and tested during development?

AI object detection is tested using benchmarks like COCO or KITTI and evaluated with metrics like IoU, Precision, Recall, and mAP. Teams also assess latency, real-time performance, and generalization to ensure accuracy across diverse driving scenarios.
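The metrics named above build on one another: IoU decides whether a predicted box counts as a match, and precision and recall are then computed from those matches. The sketch below shows that relationship for a single image and a single class. It uses greedy matching and a 0.5 IoU threshold as simplifying assumptions; benchmark toolkits such as the COCO evaluator apply more elaborate rules (confidence ordering, multiple thresholds, per-class averaging for mAP).

```python
from typing import List, Sequence, Tuple

Box = Sequence[float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions: List[Box], ground_truth: List[Box],
                     iou_threshold: float = 0.5) -> Tuple[float, float]:
    """Greedily match each prediction to its best unused ground-truth box."""
    matched = set()
    true_positives = 0
    for pred in predictions:
        best_index, best_iou = None, 0.0
        for index, truth in enumerate(ground_truth):
            if index in matched:
                continue
            score = iou(pred, truth)
            if score > best_iou:
                best_index, best_iou = index, score
        if best_index is not None and best_iou >= iou_threshold:
            matched.add(best_index)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


preds = [(0, 0, 10, 10), (50, 50, 60, 60)]
truth = [(1, 1, 10, 10), (100, 100, 110, 110)]
print(precision_recall(preds, truth))
```

Here one of two predictions matches a labeled object (a false positive remains) and one of two labeled objects is found (a miss remains), so both precision and recall come out at 0.5.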

Why deep learning instead of rule-based systems for object detection?

Deep learning models like CNNs outperform rule-based systems by automatically learning complex features, adapting to real-world variability, and delivering higher accuracy—especially in dynamic, ambiguous, or occluded scenarios. Rule-based methods lack flexibility and struggle in unpredictable environments.

Can detection accuracy be improved by tracking objects across video frames?

Yes—temporal tracking across video frames enhances detection accuracy by maintaining object continuity, reducing false positives, and improving recognition in motion or occlusion-heavy scenes—making it more reliable than frame-by-frame detection alone.
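A minimal sketch of this idea is an IoU-based tracker that only reports an object once it has been seen in several consecutive frames, which filters out one-frame false positives, and that keeps a track alive through a short gap, which helps with brief occlusions. The class names and the thresholds (`min_hits=3`, `max_misses=2`) are assumptions for illustration; production trackers usually add motion models such as Kalman filters and appearance features.

```python
from itertools import count
from typing import List, Sequence

Box = Sequence[float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class Track:
    def __init__(self, track_id: int, box: Box):
        self.id, self.box, self.hits, self.misses = track_id, box, 1, 0


class IouTracker:
    def __init__(self, iou_threshold: float = 0.3, min_hits: int = 3,
                 max_misses: int = 2):
        self.iou_threshold = iou_threshold
        self.min_hits = min_hits
        self.max_misses = max_misses
        self.tracks: List[Track] = []
        self._ids = count(1)

    def update(self, boxes: List[Box]) -> List[Track]:
        """Associate this frame's boxes with tracks; return confirmed tracks."""
        unmatched = list(boxes)
        for track in self.tracks:
            best = max(unmatched, key=lambda b: iou(track.box, b), default=None)
            if best is not None and iou(track.box, best) >= self.iou_threshold:
                track.box, track.misses = best, 0
                track.hits += 1
                unmatched.remove(best)
            else:
                track.misses += 1  # tolerated briefly, e.g. during occlusion
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]
        for box in unmatched:
            self.tracks.append(Track(next(self._ids), box))
        # Only tracks seen often enough are treated as real objects.
        return [t for t in self.tracks if t.hits >= self.min_hits]
```

Fed three frames in which a box drifts slowly to the right while a spurious detection appears once, the tracker confirms only the persistent object.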

How are unique road signs or hand gestures handled in unstructured environments?

AI systems handle non-standard signs and gestures by using context-aware models, visual cues, and localized training data. In construction zones or unfamiliar regions, combining deep learning with sensor fusion helps improve recognition of hand signals, temporary signs, and dynamic hazards.