What Is Image-to-Video AI?

Image-to-video AI turns still visuals into motion sequences using deep learning models. You upload an image. The AI fills in the gaps—backgrounds, movements, transitions—and creates a video that looks human-made. No camera crew. No editing software. Just fast, automated results.

Core Technologies: Diffusion Models, GANs, Transformers

These tools are built on advanced tech:

- Diffusion models add realistic motion and detail frame by frame. Learn more about how Stable Diffusion works and where it's headed.

- GANs (Generative Adversarial Networks) help with style transfer and cinematic effects.

- Transformers improve context understanding and scene consistency.

Together, they create videos that feel organic, not stitched together.

Common Use Cases Across Industries

Enterprises are already putting this to work:

- Marketing: Turning product shots into scroll-stopping ads.

- Gaming: Creating concept visuals and in-game animation prototypes.

- Retail: Auto-generating lifestyle reels from catalog images.

- Healthcare: Visualizing scans and simulations for training or education.

This is no longer future tech. It’s enterprise-ready now.

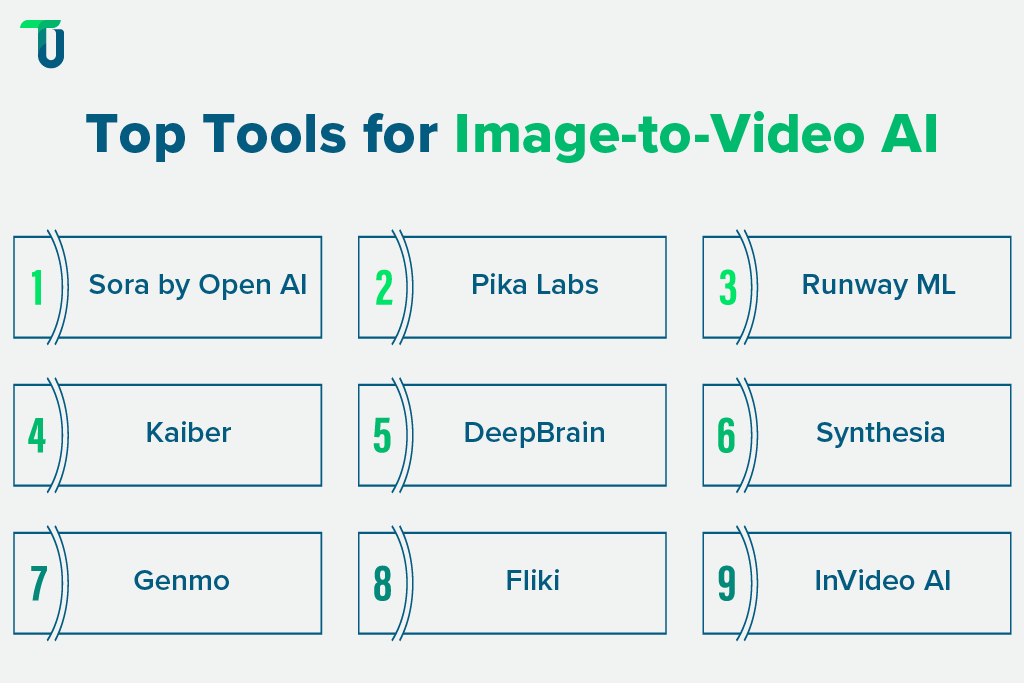

Top AI Image-to-Video Generators in 2025

Not every tool delivers enterprise-grade results. When evaluating image-to-video AI tools, here’s what matters most:

- Output Quality: Is it realistic? Brand-safe? On par with human editing?

- Speed: Can it produce usable content in minutes, not hours?

- Customization: Does it allow style control, voiceovers, aspect ratios?

- API Access: Is it easy to integrate with your content or product pipeline?

- Pricing: Are you paying for vanity features or real business value?

Before choosing a tool, ask:

- Is the video quality production-grade?

- Can it integrate into your tech stack?

- Will it scale with your content needs?

- Does pricing justify output?

- Do you need speed, control, or both?

Below are the top players making noise in 2025—and what they’re best suited for.

1. Sora by OpenAI

A powerful, multimodal video model that turns text, images, or concepts into highly realistic motion content. It’s built on state-of-the-art diffusion models with context-aware scene transitions.

Pros: Unmatched realism, deep contextual understanding, part of the OpenAI ecosystem

Cons: Still evolving, limited access, heavy compute load

Best For: R&D teams, innovation labs, enterprise experiments

2. Pika Labs

Pika is a creator-friendly AI tool focused on dynamic animation from static inputs. It supports creative storyboarding, camera motion, and background enhancements.

Overview: Fast and intuitive. Ideal for quick concept videos or animated explainers.

Strength: Great for animation, transitions, and visual storytelling

Best For: Marketing teams, digital agencies, product videos

3. Runway ML

A full-featured AI video editing suite. It blends text/image input with frame-by-frame editing, masking, and green screen capabilities.

Overview: Enterprise-ready with collaboration tools and manual override features.

Strength: Studio-grade quality with granular control

Best For: Creative teams, post-production units, branded content

4. Kaiber

Kaiber converts still images into music-driven video reels. It comes with ready-to-use styles and supports beat syncing for short-form content.

Overview: Built for speed and social. Great for TikTok, Reels, and brand teasers.

Strength: Auto-stylized outputs, rapid rendering

Best For: D2C brands, social teams, influencer partnerships

5. DeepBrain / Synthesia

These platforms specialize in avatar-based AI video. Upload an image, select a voice/language, and the system generates a talking head video.

Overview: Best for business communication, L&D, and internal explainers.

Strength: Supports multilingual voice sync, corporate branding

Best For: Training teams, HR, enterprise onboarding

6. Other Notables

- Genmo: Lightweight, browser-based tool for creative experiments and idea prototyping.

- Fliki: Text-to-video generator with AI voiceover and subtitle support.

- InVideo AI: Combines script input with stock assets and basic transitions. Affordable and simple.

Turn Image-to-Video AI Into a Scalable Enterprise Capability

Choosing the right tool is only half the battle. TenUp helps you align tech with your goals—so you launch fast and scale smart.

Choosing the Right Tool: Key Questions to Ask

AI tools look impressive in demos. But not every platform fits enterprise needs. Before you commit budget or build around an API, ask these four questions:

What Are You Using the Videos For?

Are these videos for brand campaigns, internal training, product demos, or R&D? Clarity here drives your tool choice. High-impact brand videos need cinematic quality. Internal explainers can be simpler. Use case defines the bar.

Do You Need Full Creative Control or Speed?

Some tools give you editing layers, voiceovers, and motion tweaks. Others focus on speed—drop an image, get a reel in minutes. If your team needs control over tone, color, and transitions, choose accordingly.

Is API Integration a Priority?

If you’re planning to automate video generation at scale—via CMS, product platforms, or internal dashboards—API access is critical. Look for tools with mature, well-documented APIs that your developers can plug into fast.
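Most hosted generators run asynchronously: you submit a job, then check back until the video is ready. The sketch below shows that polling pattern under stated assumptions. `get_status` is a hypothetical stand-in for whatever status call your vendor exposes, and the `state` and `video_url` field names are illustrative, not any specific product's API.

```python
import time
from typing import Callable


def wait_for_video(
    get_status: Callable[[str], dict],
    job_id: str,
    poll_seconds: float = 5.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a (hypothetical) job-status call until the video is ready.

    `get_status` is assumed to return a dict such as
    {"state": "pending" | "done" | "failed", "video_url": "..."}.
    """
    waited = 0.0
    while waited <= timeout_seconds:
        status = get_status(job_id)
        if status["state"] == "done":
            return status["video_url"]
        if status["state"] == "failed":
            raise RuntimeError(f"job {job_id} failed: {status.get('error', 'unknown')}")
        # Not finished yet: wait before asking again.
        sleep(poll_seconds)
        waited += poll_seconds
    raise TimeoutError(f"job {job_id} not finished after {timeout_seconds} seconds")
```

Passing `get_status` and `sleep` in as parameters keeps the loop independent of any one vendor and makes it easy to test without network calls. If a tool offers webhooks, those usually replace polling altogether.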

What’s the TCO (Total Cost of Ownership)?

Subscription pricing is just the start. Add cloud storage, rendering credits, team licenses, and API usage. If you’re generating thousands of videos monthly, a low-cost tool can become expensive fast. Calculate the real cost to scale.

Think beyond features. Focus on fit. The right tool saves time, drives consistency, and adapts as your content strategy evolves. Up next: what to do when off-the-shelf doesn’t deliver, and whether to buy or build.
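To make the TCO question concrete, here is a rough sketch that adds up the cost components named above. Every price and volume in it is a made-up placeholder, and the field names are illustrative. Swap in the figures from your own vendor quote.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    subscription: float           # flat plan fee
    seats: int                    # number of team licenses
    price_per_seat: float
    videos: int                   # videos generated per month
    credit_cost_per_video: float  # rendering credits or API usage per video
    storage_gb: float
    price_per_gb: float

    def total(self) -> float:
        """Sum every recurring component, not just the subscription."""
        return (
            self.subscription
            + self.seats * self.price_per_seat
            + self.videos * self.credit_cost_per_video
            + self.storage_gb * self.price_per_gb
        )

    def per_video(self) -> float:
        """Effective cost of one video once all overheads are included."""
        return self.total() / self.videos


# Placeholder numbers only: a plan that looks cheap on the pricing page.
plan = MonthlyCosts(
    subscription=100.0, seats=5, price_per_seat=30.0,
    videos=5000, credit_cost_per_video=0.25,
    storage_gb=500.0, price_per_gb=0.02,
)
print(f"total: {plan.total():.2f}, per video: {plan.per_video():.3f}")
```

With these placeholder inputs, usage-based credits account for most of the bill, which is the pattern to check for when volume grows.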

Build vs Buy: The Generative AI Decision Framework

Most tools are built for the average user. But enterprises aren’t average. If your business requires niche outputs, strict compliance, or deep integration into workflows, off-the-shelf tools may not be sufficient.

Pros and Cons of Buying a Commercial Tool

Pros

- Ready to deploy

- No heavy upfront investment

- Frequent updates and support

- Fast results for non-technical teams

Cons

- Limited customization

- Data lock-in and vendor dependency

- Harder to control quality and consistency at scale

- API rate limits and usage caps

Pros and Cons of Building Your Own

Pros

- Full control over video logic, quality, and UI

- Can fine-tune models to brand tone or vertical use cases

- Better long-term ROI if scaled

- Keeps sensitive data in-house

Cons

- High upfront investment (infra + talent)

- Slower time to value

- Requires ongoing model maintenance

- Complex compliance and IP considerations

Questions Every CTO Should Ask Before Building

- Is our use case unique enough to justify a build?

- Do we have the in-house talent or budget to hire?

- Can we own and train on our own data?

- Will the platform need to evolve with our products?

Buy when you need speed and scale. Build when your needs are strategic, unique, and long-term. But don’t guess: validate with a pilot before going all in.

Next, we’ll break down what it actually takes to build your own AI video stack.

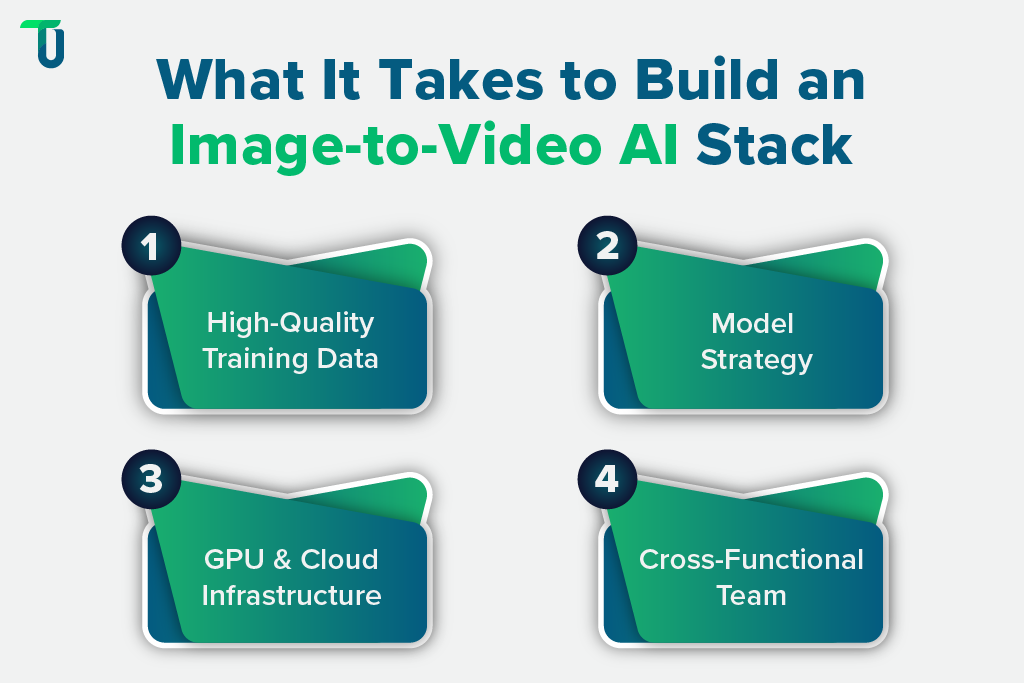

How to Build Your Own Image-to-Video AI Stack

Building your own image-to-video AI system gives you complete control over how content is created, styled, and scaled. But getting it right takes more than just downloading a model. Here’s what your team needs to prepare for.

1. Data Requirements: Sourcing, Labeling, and Ethics

Every good image-to-video AI system starts with data. You’ll need:

- Thousands of image-to-video pairs across different angles, motions, and lighting

- Clean metadata and annotations for accurate training

- Ethical sourcing to avoid compliance issues in production

If you’re also exploring image-to-image AI transformations as part of your pipeline, make sure those datasets are distinct and context-aware. Without high-quality inputs, your image-to-video output won’t meet enterprise standards.

Related Read: Using the Right Data Labeling Tools and Approaches for AI Development Success

2. Model Selection: Open Source vs Proprietary

There are two main paths:

- Open-source models like AnimateDiff or Stable Video Diffusion are ideal if you want to customize or integrate image-to-video logic into your platform.

- Proprietary models can speed things up but may limit control over results.

Fine-tuning is crucial, especially when transitioning from basic image-to-image AI to dynamic image-to-video AI workflows.
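For a sense of what the open-source path looks like before any fine-tuning, here is a minimal sketch that animates one image with Stable Video Diffusion through the Hugging Face Diffusers library. It assumes a CUDA-capable GPU plus the `torch` and `diffusers` packages. The function name, seed, and output path are illustrative choices, and you should check the model's license terms before production use.

```python
def animate_image(image_path: str, output_path: str = "generated.mp4", seed: int = 42) -> str:
    """Animate one still image with Stable Video Diffusion (sketch).

    Heavy imports are deferred so this module loads even where the
    GPU stack is not installed.
    """
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",
        torch_dtype=torch.float16,
        variant="fp16",
    )
    # Offloads idle submodules to CPU: slower, but needs less GPU memory.
    pipe.enable_model_cpu_offload()

    # Resize to the 1024x576 resolution this checkpoint expects.
    image = load_image(image_path).resize((1024, 576))
    generator = torch.manual_seed(seed)  # fixed seed for repeatable output

    # decode_chunk_size trades memory for speed when decoding frames.
    frames = pipe(image, decode_chunk_size=8, generator=generator).frames[0]
    export_to_video(frames, output_path, fps=7)
    return output_path
```

Calling `animate_image("product.png")` on a suitable machine would write a short clip to `generated.mp4`. Brand-specific results would still require the fine-tuning described above.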

Related Read: Open Source vs Proprietary LLMs: Which to Choose for Your AI Solution?

3. Infrastructure: Cloud Costs, GPU Resources, Scalability

Running a best-in-class image-to-video AI engine at scale isn’t cheap. You’ll need:

- Access to high-end GPUs like NVIDIA A100 or H100

- Cloud pipelines that can render thousands of videos in parallel

- Systems to manage latency and storage, especially when video generation is wired into real-time chat features

Estimate both training and inference costs before you scale.
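A back-of-the-envelope inference estimate can be sketched as below. The hourly rate, per-video GPU time, and utilization figure are placeholder assumptions, not quoted prices. Training cost would need a separate estimate.

```python
def monthly_inference_cost(
    videos_per_month: int,
    gpu_seconds_per_video: float,
    gpu_hourly_rate: float,
    utilization: float = 0.7,
) -> float:
    """Estimate monthly GPU spend for inference.

    `utilization` below 1.0 accounts for idle GPU time you still pay for.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    busy_hours = videos_per_month * gpu_seconds_per_video / 3600
    billed_hours = busy_hours / utilization
    return billed_hours * gpu_hourly_rate


# Placeholder scenario: 10,000 videos, 36 GPU-seconds each,
# GPUs busy half the time, at an assumed 3.00 per GPU-hour.
estimate = monthly_inference_cost(10_000, 36, 3.00, utilization=0.5)
print(f"estimated monthly inference cost: {estimate:.2f}")
```

In this scenario the GPUs do 100 hours of actual work but are billed for 200, so utilization matters as much as the hourly rate.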

Related Read: Renting GPU for Scalable and Cost-effective AI Development

4. Team Composition: AI Engineers, Designers, MLOps

You’re not just building a model—you’re building a full-stack content engine. You’ll need:

- AI engineers to architect and optimize your image-to-video AI models

- MLOps experts to handle deployment, automation, and monitoring

- Design and creative teams to guide branding and visual consistency

- A product or program lead to keep tech aligned with strategic goals

TenUp has delivered real-world Vision AI solutions across industries, from automated fish identification in smart fishing to real-time casino betting powered by AI object detection.

If you're integrating chat-with-image features or adding image-to-image AI modules, your team structure may need to expand.

A custom image-to-video AI stack makes sense when content is a strategic differentiator. If you're running large-scale personalization, building immersive product visuals, or developing interactive tools that combine image-to-video AI with AI chat and imagery, this route offers control and long-term ROI.

Related Read: Explore our AI case studies to see our engineering in action.

Case Studies: Build vs Buy in the Real World

Seeing how other companies have tackled image-to-video AI decisions can help you benchmark your own. Here are a few real-world examples of what worked—and why:

Toys "R" Us – AI Brand Film with OpenAI’s Sora

Toys "R" Us teamed up with creative agency Native Foreign to produce a 66‑second brand film using Sora, OpenAI’s advanced generative video model. The ad narrates the origin story of founder Charles Lazarus, blending historical and fantastical visuals. It premiered at Cannes and sparked public debate, praised for innovation but also critiqued for its uncanny AI-rendered characters.

Why it matters:

- Showcases cinematic-quality image-to-video AI at scale

- Fast production turnaround and relatively low cost

- Mixed responses highlight the balance needed between tech and human storytelling

Kalshi (Sports Betting) – Viral AI-Generated Ad

Commercial director PJ Accetturo used Google’s Veo 3 (a generative video tool) along with AI tools such as ChatGPT and Midjourney to produce a parody ad, “Puppramin,” for Kalshi during the NBA Finals. The project took just 3 days and about $2,000, generating 18 million+ impressions and securing a commercial deal.

Why it matters:

- Demonstrates how image-to-video AI can accelerate go-to-market speed

- Low-cost, high-impact content creation for brands

Headway (EdTech) – 40% Lift in Ad Performance

Ukrainian ed-tech startup Headway used Midjourney, HeyGen, and other generative tools to create ad content with animated visuals and dynamic voiceovers. This multimedia strategy led to a 40% increase in ROI and 3.3 billion impressions in early 2024.

Why it matters:

- Effective use of AI image-to-video in performance marketing

- Enables rich touchpoints with global audiences at scale

TenUp has built scalable computer vision solutions, including a personalized, AI-driven image background removal and replacement solution with advanced shadow generation capabilities. See how enterprises build smarter with us.

Future Outlook: Where Image-to-Video AI Is Headed

Image-to-video AI is just getting started. What we’re seeing now is only phase one. The next wave will shift this tech from tool to core infrastructure. Here’s what your leadership team should be watching.

Predictive Content Generation

Tomorrow’s AI won’t just respond to prompts. It will anticipate them. Expect systems that auto-generate videos from usage patterns, CRM data, or live analytics. For example, a product image uploaded into your CMS could trigger an AI image-to-video module that builds a full product ad—no prompt needed.

Real-Time Video Creation with LLM Agents

Large language models (LLMs) will soon power autonomous agents that combine vision, text, and logic. That means an AI agent that:

- Chats with a user

- Analyzes their need

- Picks an image

- Runs an image-to-video pipeline, all in real time

This is where chat-with-image capabilities and image-to-video AI converge.

Compliance, Deepfake Risks, and Guardrails for Enterprises

As image-to-video AI becomes more realistic, regulatory pressure will grow. Expect frameworks for:

- Content traceability and watermarking

- Consent verification on uploaded images

- Risk scoring for AI-generated videos in sensitive domains like health, finance, or politics

Enterprise-grade image-to-video AI solutions will need built-in compliance layers.
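One small piece of such a compliance layer could be a traceability record written for every generated video. The sketch below is illustrative only: the function and field names are hypothetical, and a real system would likely add signing, tamper-proof storage, and a proper consent workflow.

```python
import hashlib
from datetime import datetime, timezone


def provenance_record(
    source_image: bytes,
    video: bytes,
    model_name: str,
    consent_given: bool,
) -> dict:
    """Build an audit record linking a generated video to its source image.

    Refuses to proceed when no consent is recorded for the uploaded image.
    """
    if not consent_given:
        raise PermissionError("no recorded consent for the uploaded image")
    return {
        # Content hashes let auditors match files to this record later.
        "source_sha256": hashlib.sha256(source_image).hexdigest(),
        "video_sha256": hashlib.sha256(video).hexdigest(),
        "model": model_name,
        "ai_generated": True,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }
```

Storing a record like this alongside each asset gives you a basic answer to "which image and which model produced this video?" when a review or takedown request arrives.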

Image-to-Image AI Will Feed the Pipeline

We’ll also see tighter integration between AI image-to-image tools and video generation. Imagine:

- Style transfer (image-to-image)

- Then motion rendering (image-to-video)

- Then delivery through chat or apps (AI chat with image)

This end-to-end pipeline will power everything from ads to training to customer support.

Not Just AI-Ready. AI-Built with TenUp Software Services

The future of video is not about editing timelines or studio shoots. It’s about smart systems that turn still images into motion content at scale. Whether you’re experimenting with AI image-to-video tools or building your own image-to-video AI engine, execution is everything.

At TenUp Software Services, we help enterprises go from concept to production with precision. Our AI engineering team combines deep learning expertise, scalable cloud architecture, and model tuning workflows specifically tailored to your industry.

From integrating off-the-shelf APIs to building custom image-to-video AI stacks, we offer:

- Custom model development for diffusion, GAN, and transformer-based video systems

- End-to-end MLOps pipelines for training, deployment, and optimization

- Enterprise-grade compliance, observability, and security baked into every project

- Support for integrating AI chat with images, image-to-image AI, and other multimodal AI layers

If your team is thinking beyond proof of concept and is ready to operationalize AI video, we’re here to help you lead that shift. Let’s build something that moves, and see how we help businesses turn vision into velocity.

Don’t Just Use AI—Own It. Start Your Custom Image-to-Video Journey.

Build enterprise-grade image-to-video pipelines powered by custom AI models, scalable MLOps, and secure cloud infrastructure with TenUp.

Frequently Asked Questions

What file formats can I export from image‑to‑video AI tools, and which work best for social platforms?

Most AI video tools export in MP4, the universal format preferred by Instagram, TikTok, and YouTube. For the best results, use MP4 with H.264 encoding, as it ensures smooth playback, smaller file sizes, and full compatibility across platforms. Check each platform’s aspect ratio and video length guidelines to optimize reach.
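If a tool exports something other than H.264 MP4, a re-encode with FFmpeg is a common fix. The sketch below only builds the command. It assumes `ffmpeg` is installed, and the 1080x1920 vertical default is an illustrative choice for short-form feeds, not a platform requirement.

```python
def h264_mp4_command(
    src: str,
    dst: str,
    width: int = 1080,
    height: int = 1920,
    fps: int = 30,
) -> list[str]:
    """Build an ffmpeg command that re-encodes `src` as an H.264 MP4."""
    # Fit inside the target frame, then pad to the exact size, centered.
    fit_and_pad = (
        f"scale={width}:{height}:force_original_aspect_ratio=decrease,"
        f"pad={width}:{height}:(ow-iw)/2:(oh-ih)/2"
    )
    return [
        "ffmpeg", "-y",
        "-i", src,
        "-vf", fit_and_pad,
        "-r", str(fps),
        "-c:v", "libx264",          # H.264 video
        "-pix_fmt", "yuv420p",      # widest player compatibility
        "-movflags", "+faststart",  # lets playback begin before full download
        "-c:a", "aac",
        dst,
    ]


cmd = h264_mp4_command("ai_output.mov", "social_ready.mp4")
print(" ".join(cmd))
```

To run it, pass the list to `subprocess.run(cmd, check=True)`.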

How do different AI models (like diffusion vs GAN vs transformer) affect motion quality in generated videos?

Different AI models affect video motion in distinct ways.

- Diffusion models generate smooth, realistic frame-by-frame motion—ideal for cinematic output.

- GANs specialize in artistic effects and texture realism, though they can introduce flicker.

- Transformers enhance temporal consistency, ensuring scenes flow logically.

What are the compute and GPU requirements to run enterprise‑grade image‑to‑video on‑premises?

Enterprise-grade image-to-video AI requires high-end GPUs like NVIDIA A100, H100, or RTX 6000 Ada, 64–128GB RAM, and fast SSD storage. For large-scale or real-time rendering, use GPU clusters or a Kubernetes setup. On-premises deployment is ideal for data-sensitive workloads, but cloud options may offer better cost-efficiency at scale.

How can I embed image‑to‑video within a CMS via API—are there standard integration templates?

You can embed image-to-video functionality in a CMS by connecting tools like Runway, Pika, or Kaiber via their REST APIs. Use your CMS API (e.g., WordPress, Contentful) to insert the generated video URL into posts. While there are no universal templates, tools like Zapier or Make simplify automation without code.
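As one example of the CMS side, the sketch below prepares a WordPress REST API request that creates a draft post embedding a generated video. It assumes the video URL has already been returned by your generator and that `auth_header` holds valid credentials. The helper name is hypothetical, and nothing is sent until you call `urllib.request.urlopen` on the result.

```python
import html
import json
import urllib.request


def build_wordpress_post_request(
    site_url: str,
    title: str,
    video_url: str,
    auth_header: str,
) -> urllib.request.Request:
    """Prepare (but do not send) a request that creates a draft post."""
    safe_url = html.escape(video_url, quote=True)
    payload = {
        "title": title,
        "content": f'<video controls src="{safe_url}"></video>',
        "status": "draft",  # keep a human review step before publishing
    }
    return urllib.request.Request(
        url=f"{site_url.rstrip('/')}/wp-json/wp/v2/posts",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": auth_header,
        },
        method="POST",
    )
```

Creating the post as a draft keeps editors in the loop. Other CMS platforms follow the same shape with their own endpoints and field names.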

What intellectual property issues arise when generating videos from user‑provided images?

AI-generated videos based on user images can raise IP risks if the source content lacks clear ownership or consent. Videos may inherit likeness, copyright, or usage rights issues. To reduce legal exposure, businesses should enforce consent verification, usage terms, and transparent AI disclosures.

Can image‑to‑video AI tools generate consistent characters or branding across multiple clips?

Yes, image-to-video AI tools can maintain consistent characters and branding using reference images, embedding tokens, or fine-tuned models. Platforms like Sora, Runway, and Kaiber support continuity across clips, making them ideal for multi-episode stories or branded video series.

What are the latency and throughput benchmarks for real‑time image‑to‑video generation?

Generation is not yet truly real-time: a 5-second 720p video typically takes 15–60 seconds on a single GPU. High-throughput setups using GPU clusters can generate dozens of videos per minute, but anything approaching real-time performance still requires trade-offs in quality, resolution, and latency.
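The relationship between per-video generation time and cluster size is simple arithmetic, sketched below. It assumes each GPU works on one video at a time and ignores queueing and upload overhead, so treat the result as a lower bound.

```python
import math


def gpus_needed(target_videos_per_minute: float, seconds_per_video: float) -> int:
    """Minimum GPUs to sustain a target throughput, one video per GPU at a time."""
    return math.ceil(target_videos_per_minute * seconds_per_video / 60)


# Using the 15-60 second range above, for a target of 24 videos per minute:
for seconds in (15, 30, 60):
    print(f"{seconds}s per video -> {gpus_needed(24, seconds)} GPUs")
```

A single GPU at 15 to 60 seconds per clip produces one to four videos per minute, so "dozens per minute" implies a cluster on the order of a dozen or more GPUs.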

Are there open‑source pipelines combining image‑to‑image style transfer with image‑to‑video animation?

Yes, open-source tools like AnimateDiff, Stable Video Diffusion, and ControlNet can be combined to apply style transfer before animation. Frameworks like ComfyUI and Hugging Face Diffusers support modular workflows that chain image-to-image and image-to-video models seamlessly.