How Does Image Recognition Work?

Before you invest time and tech into building your own image recognition system, you need to understand what’s happening under the hood.

At its core, image recognition—whether it's for face detection and recognition, identifying products on a shelf, or analyzing X-rays—is about teaching machines to "see" like humans. But unlike us, machines need mathematical clarity.

Let’s break it down.

From Pixels to Predictions: The Basics

Every image is just a grid of pixels. But when you run it through a trained model, that grid transforms into structured data. You get labels, bounding boxes, and heatmaps.

This transformation happens through a series of layers in a neural network. Early layers pick up simple patterns such as edges and textures; deeper layers combine them into shapes and whole objects.
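To make the "grid of pixels" idea concrete, here is a minimal sketch of what a single early-layer filter does. It assumes a tiny hand-made grayscale image and a fixed Sobel-style kernel; in a real CNN the kernel values are learned during training rather than written by hand, and the function name `convolve2d` is only illustrative.

```python
# A tiny grayscale "image": 0 = dark, 9 = bright. The left half is dark and
# the right half is bright, so there is one vertical edge in the middle.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# A 3x3 Sobel-style kernel that responds to left-to-right brightness changes.
kernel = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve2d(img, k):
    """Slide kernel k over img (no padding, no flipping, as CNN layers do)."""
    kh, kw = len(k), len(k[0])
    out_h = len(img) - kh + 1
    out_w = len(img[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += img[r + i][c + j] * k[i][j]
            row.append(total)
        out.append(row)
    return out


edge_map = convolve2d(image, kernel)
for row in edge_map:
    print(row)  # each row is [0, 36, 36, 0]: strong response only at the edge
```

The response is zero over flat regions and large where brightness changes, which is the kind of signal deeper layers then combine into shapes and objects.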

Whether you're building photo recognition software for consumer apps or image detection tools for industrial use cases, this is your foundation.

Key Concepts: Classification, Detection, Segmentation

- Classification tells you what’s in the image. Is it a cat or a car?

- Image detection goes a step further. It tells you where the object is.

- Segmentation breaks the image down at the pixel level. It’s what you need if you want to draw boundaries around tumors in a medical scan or count ripe fruits in a smart farm.

Choosing the right approach here depends on your business goal and the KPIs you’ll measure later.
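The difference between the three tasks is easiest to see in the shape of their outputs. The sketch below uses made-up values for one small image containing a single fruit; the dictionary keys and the helper `mask_to_box` are hypothetical and not tied to any particular framework.

```python
# Hypothetical outputs for one 4x6 image containing a single "fruit".

# Classification: one label (with a confidence) for the whole image.
classification = {"label": "fruit", "confidence": 0.93}

# Segmentation: one class id per pixel (1 = fruit, 0 = background).
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 1, 1, 0, 0],
]


def mask_to_box(mask):
    """Return the tightest (x_min, y_min, x_max, y_max) box around the mask."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None  # nothing was segmented
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return (min(cols), min(rows), max(cols), max(rows))


# Detection: a label plus a box. Here the box is derived from the mask to show
# that a mask carries more information than a box.
detection = {"label": "fruit", "box": mask_to_box(mask)}

# Only segmentation can answer "how many pixels does the object cover?"
pixel_area = sum(sum(row) for row in mask)
print(detection["box"], pixel_area)  # (1, 1, 4, 3) 8
```

If a KPI depends on area or exact boundaries, such as tumor size, a box is not enough. If it only depends on presence or rough location, segmentation is likely more labeling effort than the goal requires.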

Role of Machine Learning and Deep Learning

Traditional ML techniques can get you part of the way. But deep learning, specifically Convolutional Neural Networks (CNNs), powers most modern face detection and recognition systems, autonomous vehicles, and real-time video analysis platforms.

Why? Because deep learning models don’t need hand-crafted rules. They learn patterns directly from data and improve as they are retrained on more of it.

So, if your goal is to build photo recognition software that actually adapts to new environments and edge cases, deep learning is not optional. It is your engine.

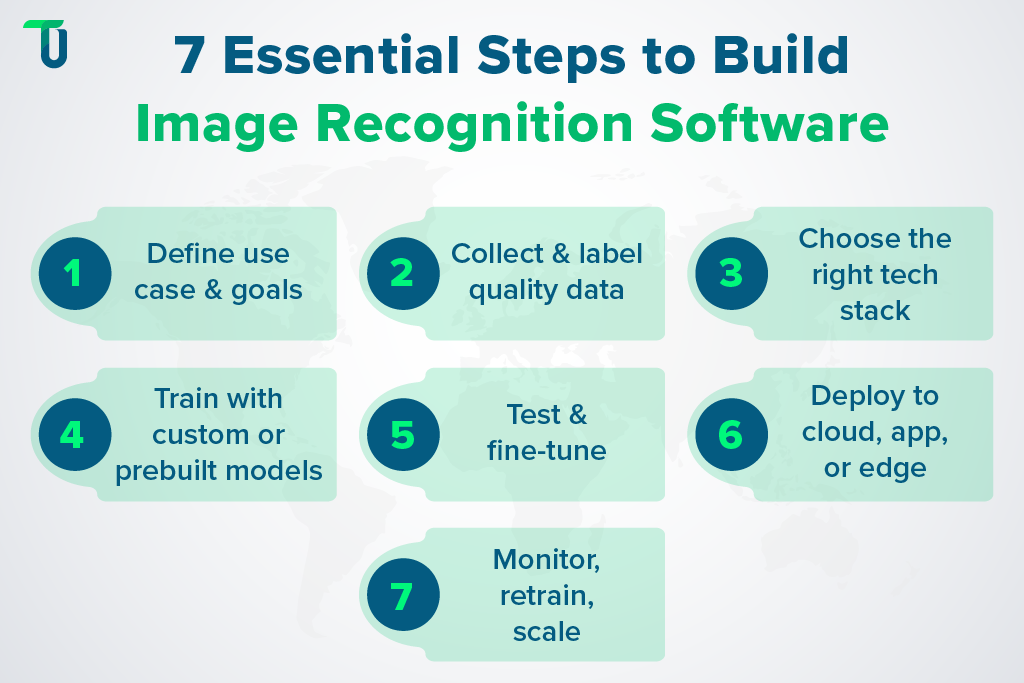

A Detailed Roadmap to Build Your Own Image Recognition Software

This isn’t a generic checklist. It’s a practical, decision-focused roadmap to help you move from idea to production-grade image recognition—built for your use case, on your infrastructure, with full control.

Whether you're building photo recognition software for a customer-facing product or an internal image detection system for operations, this roadmap covers every key milestone you’ll need to hit, including image segmentation solutions with measurable ROI.

Step 1: Define Your Use Case and Objectives

Start with the problem, not the tech.

- What exactly are you trying to recognize?

  - Objects, faces, documents, defects, text

- What does success look like?

  - Accuracy thresholds, processing time, deployment constraints

- Are there industry-specific KPIs to consider?

  - Healthcare will demand clinical-grade precision

  - Retail may focus more on speed and scale

Get specific. A clear use case sets the direction for everything that follows—from dataset selection to architecture choices.

Step 2: Gather and Prepare Your Dataset

No good model survives bad data.

- Where will your images come from?

  - Use public datasets for bootstrapping (e.g., COCO, ImageNet)

  - Build custom datasets for domain-specific recognition tasks

- Label your data accurately.

  - Use annotation tools like CVAT, Labelbox, or V7

- Clean and augment.

  - Remove noise, balance your classes, and simulate edge conditions with rotation, blur, and lighting variations

For robust face detection and recognition systems or specialized industrial use cases, invest time in high-quality annotations. It pays off later.
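As a rough illustration of the "clean and augment" step, the sketch below applies a few simple transformations to a grayscale image stored as nested lists. It uses plain Python so it runs without dependencies; in practice you would more likely use OpenCV or a framework's augmentation utilities. The function names and the 0.7 to 1.3 brightness range are assumptions chosen for the example.

```python
import random


def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img, factor):
    """Scale pixel values and clamp them to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]


def augment(img, rng):
    """Apply a random combination of the transformations above."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = rotate90(img)
    # Simulate lighting variation (range is an arbitrary example choice).
    return adjust_brightness(img, rng.uniform(0.7, 1.3))


original = [[10, 20], [30, 40]]
rng = random.Random(42)  # seeded so runs are repeatable
variants = [augment(original, rng) for _ in range(4)]
```

For detection or segmentation data, the same geometric transformation has to be applied to the boxes or masks as well, otherwise the labels no longer match the pixels.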

Step 3: Choose the Right Tech Stack

The right tools will make or break your timeline.

- Languages: Python is the go-to, but JavaScript works for lightweight deployments

- Frameworks: TensorFlow, PyTorch, Keras for deep learning. OpenCV for preprocessing and traditional computer vision tasks

- Infrastructure:

  - GPUs for training

  - Cloud for scalability

  - Edge devices for real-time, offline inference

Your stack should match your deployment needs, not just your dev team’s familiarity.

At TenUp, we build mobile apps with AI-powered fish species detection capabilities. Explore the complete case study here.

Step 4: Train Your Image Recognition Model

This is where your system starts to take shape.

- Use pre-trained models like YOLO, ResNet, or MobileNet if you need speed and efficiency

- Build from scratch for highly custom photo recognition software

- Tune your model using the right loss functions and optimizers

- Track key metrics: accuracy, precision, recall, F1 score

Training is iterative. Without structured image annotation and retraining workflows, the first version often remains just a baseline. Don’t aim for perfection; aim for continuous improvement.
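The metrics listed above are simple to compute by hand, which helps when deciding which one your use case should optimize. This is a minimal sketch for a binary task (1 = defect, 0 = no defect); the function name `binary_metrics` and the sample labels are made up for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# A model that never flags a defect still scores 80% accuracy here,
# while recall and F1 expose that it misses every defect.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_ok = [0] * 10
print(binary_metrics(y_true, always_ok))
```

On imbalanced data, which is common in defect detection and medical imaging, accuracy alone can hide a model that is useless for the class you care about.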

For example, we used GPT-4 to train a multimodal image QC system that flagged defects in 4K automotive photos, delivering 10 times faster results with domain-specific tuning. Read the case study.

Step 5: Test and Fine-Tune for Real-World Accuracy

Lab accuracy means nothing if it fails in production.

- Test on real-world inputs with noise, blur, or occlusion

- Fine-tune with transfer learning and hyperparameter optimization

- Detect and fix overfitting early. If your model aces training data but struggles on test sets, it’s not ready

Use test cases that reflect the worst-case scenarios, not just ideal conditions.
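One common way to catch overfitting early is to watch the gap between training and validation results and stop training once validation loss stops improving. The sketch below shows both checks under simple assumptions: the loss values are invented, and `patience=2` is an arbitrary example setting, not a recommendation.

```python
def best_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once validation loss
    has failed to improve for `patience` consecutive epochs."""
    best, best_idx, waited = float("inf"), -1, 0
    for i, loss in enumerate(val_losses):
        if loss < best:
            best, best_idx, waited = loss, i, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_idx


def generalization_gap(train_acc, val_acc):
    """A large positive gap suggests the model memorized the training set."""
    return train_acc - val_acc


# Invented numbers: validation loss bottoms out at epoch 2, then climbs
# while training would keep improving.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.71]
keep = best_epoch(val_losses)          # epoch index to keep
gap = generalization_gap(0.99, 0.81)   # roughly 0.18
```

What counts as "too large" a gap depends on the task, so treat any threshold as something to calibrate against your own validation and production data.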

Step 6: Deploy Your Image Recognition System

Deployment isn’t an afterthought. It’s where most real-world friction happens.

- Choose your environment: on-premise, cloud, edge, or mobile

- Decide how to serve the model:

  - REST APIs

  - Embedded into web or mobile apps

  - Real-time streaming pipelines

- Build for low latency, high reliability, and easy rollback

If you're deploying a face detection and recognition feature into a customer-facing app, every millisecond matters.
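For the REST option, the core idea is a thin HTTP layer around a prediction function. The sketch below uses only Python's standard library and a placeholder `predict` that does not run a real model; the `/predict` path, the port, and all function names are assumptions for illustration. A production service would typically sit behind a proper web framework with authentication, timeouts, and logging.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(image_bytes):
    """Stand-in for a real model call (hypothetical, returns a fixed label)."""
    return {"label": "placeholder", "confidence": 0.0,
            "bytes_received": len(image_bytes)}


def handle_predict(body):
    """Request logic kept separate from HTTP so it can be tested directly."""
    if not body:
        return 400, {"error": "empty request body"}
    return 200, predict(body)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


# To try it locally, uncomment the next line and POST image bytes to /predict:
# HTTPServer(("127.0.0.1", 8000), PredictHandler).serve_forever()
```

Keeping `handle_predict` independent of the server class also makes rollback easier, since swapping the model only touches `predict`.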

For example, TenUp deployed the automotive image QC solution into the client’s existing workflow. AWS Lambda processed images in parallel, feeding results back into an internal dashboard for auto dealers. Batch processing reduced API usage and kept costs down.

Step 7: Monitor, Improve, and Scale

The work doesn’t end at deployment. That’s where it begins.

- Monitor model drift, edge failures, and false positives

- Set up feedback loops for continuous learning

- Version your models and automate retraining pipelines

- Scale horizontally across devices or locations as demand grows

Success isn’t shipping a model. It’s running one that keeps improving over time.
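One simple way to monitor drift is to compare the distribution of the model's confidence scores in production against a baseline captured at launch. The sketch below uses the Population Stability Index (PSI) for that comparison. The sample scores are invented, and the 0.25 alert level is a commonly quoted rule of thumb rather than a fixed standard.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between two samples of values in [0, 1]."""
    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = min(int(v * bins), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Invented confidence scores: the baseline is mostly confident, while
# production has shifted toward uncertain predictions.
baseline = [0.95] * 80 + [0.85] * 20
production = [0.95] * 30 + [0.55] * 70

drift_score = psi(baseline, production)
needs_review = drift_score > 0.25  # rule-of-thumb threshold (assumption)
```

A score like this can feed the retraining trigger in your pipeline, ideally alongside accuracy checks on a freshly labeled sample, since confidence can stay stable while accuracy degrades.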

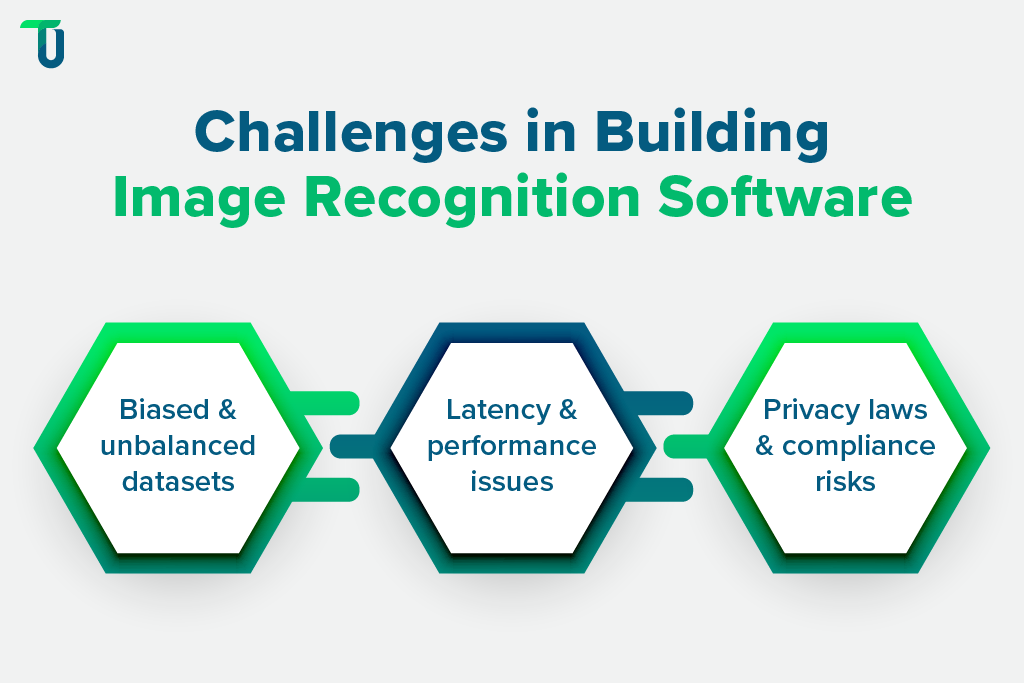

Challenges to Watch Out For When Building Image Recognition Software

Building your own image recognition system gives you control, but it also comes with its own set of risks. Most failures don’t happen in model training. They happen in assumptions, data quality, and system scaling.

If you want your photo recognition software or image detection system to survive in the real world, watch for these pitfalls early.

Biased Datasets and Ethical Considerations

Your model is only as fair as your data.

If your training dataset underrepresents certain groups or object types, you’ll end up with biased predictions. This is especially dangerous in sensitive use cases like face detection and recognition.

Before you ship anything:

- Audit your dataset for representation gaps

- Avoid using datasets scraped without consent

- Consider how predictions might impact users, especially in regulated sectors like healthcare or law enforcement

AI is powerful, but without checks, it can amplify bias at scale.
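A representation audit can start as a simple count per class or per group. The sketch below flags any label whose share of the dataset falls under a chosen minimum; the 10 percent cutoff and the label names are assumptions for the example, and the right cutoff depends on your domain.

```python
from collections import Counter


def representation_gaps(labels, min_share=0.10):
    """Return {label: share} for labels below `min_share` of the dataset."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total
            for label, n in counts.items()
            if n / total < min_share}


# Invented label distribution for a three-class dataset.
labels = ["scratch"] * 90 + ["dent"] * 8 + ["rust"] * 2
gaps = representation_gaps(labels)
print(gaps)  # {'dent': 0.08, 'rust': 0.02}
```

The same check applies to metadata such as lighting condition, camera type, or demographic group, provided that information is recorded at annotation time.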

Performance Bottlenecks

Your model may look great in Jupyter Notebooks, but production demands more.

- Latency becomes critical when you’re running inference on mobile or edge devices

- Throughput matters if you’re processing thousands of images per second in a live stream

- Memory and compute costs can spiral if your system isn’t optimized

Use lightweight architectures or model quantization when deploying image detection models in low-resource environments. And always benchmark in real-world conditions, not just on your dev laptop.
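To show what quantization trades away, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. It is a simplified, per-tensor illustration in plain Python; real toolchains in TensorFlow Lite or PyTorch handle calibration, per-channel scales, and activations as well. The function names are illustrative.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0, 0.123]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each weight now needs 1 byte instead of 4, at the cost of a rounding
# error of at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Whether that rounding error is acceptable is an empirical question, so accuracy should be re-measured on the quantized model before it ships.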

Compliance with Privacy Laws

If your system involves human faces, identifiable objects, or personal data, you're in regulated territory.

- GDPR in Europe

- HIPAA in the US for healthcare data

- Local data residency laws in APAC and the Middle East

Building your own image recognition solution means you’re responsible for where and how data is stored, processed, and transmitted.

Ensure:

- Explicit consent from users before collecting their images

- Proper anonymization or masking

- Secure model endpoints with encryption and role-based access

Neglecting compliance is a fast way to lose customer trust and face penalties.
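Masking can be as simple as overwriting a detected region before the image is stored. The sketch below pixelates a rectangular region of a grayscale image held as nested lists; the box is assumed to come from an upstream face or license plate detector, which is not shown, and the function name is illustrative.

```python
def pixelate_region(img, box, block=2):
    """Return a copy of a grayscale image with `box` replaced by block averages.

    box = (x_min, y_min, x_max, y_max), inclusive pixel coordinates.
    """
    x0, y0, x1, y1 = box
    out = [row[:] for row in img]  # never modify the caller's image
    for by in range(y0, y1 + 1, block):
        for bx in range(x0, x1 + 1, block):
            ys = range(by, min(by + block, y1 + 1))
            xs = range(bx, min(bx + block, x1 + 1))
            pixels = [img[y][x] for y in ys for x in xs]
            avg = sum(pixels) // len(pixels)
            for y in ys:
                for x in xs:
                    out[y][x] = avg
    return out


image = [
    [10, 20, 1, 2],
    [30, 40, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
]
masked = pixelate_region(image, box=(0, 0, 1, 1))
```

Light pixelation may not be enough to prevent re-identification, so for sensitive data it can be safer to replace the region with a solid fill or to avoid storing the raw image at all.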

Tackle these challenges early and intentionally. They’re not just technical blockers. They directly impact ROI, user trust, and long-term viability.

Success Stories and Real-World Use Cases of Custom Image Recognition

If you're evaluating custom image recognition or face detection and recognition as a strategic investment, here are real-world success stories delivering measurable ROI in retail, security, healthcare, and logistics. That includes AI logistics solutions for moving companies that automate item profiling and condition reporting for on-ground moving crews.

Amazon Go – Checkout-Free Stores

Amazon Go combines computer vision, sensor fusion, and deep learning to enable seamless “grab and go” shopping. Customers simply walk out with their items, and Amazon automatically charges them. This reduces labor costs and improves inventory tracking.

Oosto – Real-Time POI Alerting

Oosto’s software enables real-time identification of persons of interest in crowded venues like sports arenas and corporate campuses. Security teams receive alerts within milliseconds, improving situational awareness.

BakeryScan – Medical Imaging

BakeryScan was first built to identify pastries in Japanese bakeries. It was later adapted to detect cancer cells in pathology slides with over 98 percent accuracy. This cross-domain application demonstrates how image recognition can serve both retail and healthcare sectors.

Pinterest’s “Shop The Look”

Pinterest built its own photo recognition software to let users click on any item in an image and shop directly. Their AI pipeline increased user engagement by more than 80 percent.

Michael Kors – Personalized Online Experience

Michael Kors uses visual recognition and AI to personalize shopping experiences. From product recommendations to smarter inventory management, the results include higher conversion rates and increased customer satisfaction.

Why Build When You Can Build It Right?

Off-the-shelf tools give you speed. But building your own image recognition system gives you control, precision, and long-term value. The only question left is—can you do it right the first time?

That’s where TenUp Software Services comes in.

We don’t just write code. We build production-grade AI systems that solve real business problems across industries like manufacturing, healthcare, retail, and logistics. Our team brings deep expertise in:

- Custom image detection and photo recognition software

- Advanced model training using TensorFlow, PyTorch, and OpenCV

- Edge AI, cloud-native deployments, and full-lifecycle DevOps

- Compliance-ready builds with HIPAA, GDPR, and SOC2 considerations

- Scalable MLOps pipelines that keep learning from your data

Whether you’re building a face detection and recognition feature for secure access, a defect detection system for your factory, a personalized retail experience based on visual search, or image segmentation solutions with measurable ROI, our AI engineers, cloud architects, and product strategists can help you build what your business needs.

Because building in-house doesn’t mean building alone.

Explore how TenUp Software Services can help you turn your vision into a market-ready AI solution.

Frequently asked questions

What are the signs that I need a fully custom image recognition system instead of relying on an off-the-shelf API?

If you're working with rare objects, strict accuracy needs, edge deployments, or compliance requirements, a custom model is likely better. APIs often fall short on domain-specific performance, flexibility, and cost at scale.

How much data do I actually need to train a custom image recognition model for uncommon classes?

For uncommon or nuanced classes, aim for 500–1,000 high-quality, labeled images per class, depending on class variability and model complexity. With transfer learning, strong results can often be achieved with just 200–500 images per class, especially when the domain is close to the pre-trained model.

What latency targets should I aim for in real-time image inference at the edge?

Aim for:

- < 100 ms for live video

- < 50 ms for AR/VR or autonomous systems

- < 20 ms for mission-critical tasks like healthcare or security

Lower is better—optimize with model compression, edge hardware, and fast inference libraries.
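Targets like these are only meaningful if you measure them on the actual device and look at tail latency, not just the average. The sketch below times any callable and reports percentiles in milliseconds. The `fake_inference` function is a stand-in for a real model call, and the run counts are arbitrary example values.

```python
import time


def latency_percentiles(fn, runs=50, warmup=5):
    """Time `fn` repeatedly and return p50, p95, and max latency in ms."""
    for _ in range(warmup):
        fn()  # warm caches before measuring
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()

    def pct(p):
        idx = min(len(samples) - 1, int(round(p / 100 * (len(samples) - 1))))
        return samples[idx]

    return {"p50": pct(50), "p95": pct(95), "max": samples[-1]}


def fake_inference():
    """Placeholder workload standing in for model.predict(image)."""
    return sum(i * i for i in range(10_000))


stats = latency_percentiles(fake_inference, runs=20, warmup=2)
meets_budget = stats["p95"] < 100.0  # compare against the live-video target
```

Checking the 95th percentile rather than the median matters because a real-time pipeline is judged by its slowest frames.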

How can I monitor drift and continuously retrain models without manual intervention?

Use an MLOps pipeline with tools like EvidentlyAI or WhyLabs to detect drift in data or performance. Automate retraining triggers via MLflow, Kubeflow, or cloud workflows when metrics cross thresholds. This enables hands-off, self-healing model updates.

What are the tradeoffs of using transfer learning vs. building a model from scratch?

Transfer learning is faster, needs less data, and works well for common tasks, but may underperform on niche or complex domains. Building from scratch offers full control and potentially higher accuracy, but demands more data, compute, and expertise.

What are practical strategies for annotating niche-domain image data cost‑effectively?

Combine active learning with open-source tools (e.g., CVAT, Label Studio) and managed labeling services like Amazon SageMaker Ground Truth. Semi-automated labeling with human review also reduces costs without compromising quality.
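Active learning usually means sending annotators the images the current model is least sure about. The sketch below ranks unlabeled images by the entropy of their predicted class probabilities and picks the top few. The image IDs and probabilities are invented, and entropy is only one of several possible uncertainty measures.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Pick the `budget` most uncertain images.

    predictions: {image_id: [class probabilities]}
    """
    ranked = sorted(predictions,
                    key=lambda image_id: entropy(predictions[image_id]),
                    reverse=True)
    return ranked[:budget]


# Invented model outputs for three unlabeled images.
predictions = {
    "img_001": [0.98, 0.02],  # confident, little to gain from labeling
    "img_002": [0.50, 0.50],  # maximally uncertain
    "img_003": [0.70, 0.30],
}
to_label = select_for_labeling(predictions, budget=2)
print(to_label)  # ['img_002', 'img_003']
```

Labeling the uncertain cases first tends to improve the model faster per annotated image than labeling at random, which is where the cost saving comes from.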

How do privacy regulations (GDPR/HIPAA) influence on‑device image recognition architecture?

GDPR and HIPAA call for a lawful basis such as consent or authorization, data minimization, and safeguards around personal data. Neither mandates local processing, but on-device image recognition supports compliance by keeping images off the cloud, reducing exposure, and helping meet regional data residency requirements.